Is AMD Overvalued?

What I'm Doing with my AMD Position

AMD stock has seen a massive 45% surge in just two weeks after the OpenAI deal.

In this article, I cover whether it’s time to sell or if there’s still upside potential.

The OpenAI Deal

AMD signed an agreement with OpenAI to supply MI450 “Helios” systems for up to 6 GW of capacity over the next five years. After OpenAI buys and deploys these systems and AMD’s share price reaches certain milestones, OpenAI will receive warrants. These warrants allow OpenAI to take a 10% stake in AMD, with a $600 per share strike price for the final tranche.

This deal has sparked some controversy because some argue OpenAI is getting AMD’s systems for free, as the warrants’ value is roughly equal to the value of the systems.

First, the goal of any management team is to create shareholder value. OpenAI must drive AMD’s share price higher to receive the warrants, while also deploying the systems. That is a clear positive for both the company and investors.

For shareholders, the upside is simple: the stock appreciates. For AMD, the deal is extremely valuable. Yes, they’re giving away some equity, but companies do this for many reasons. It’s not always negative and can be accretive, which I believe applies here.

BofA estimates the deal could add around $10 billion in EBIT for FY 2030. At a 20× multiple, that equals about $200 billion in added market cap, in exchange for roughly $100 billion in equity that is granted over five years and tied to stock price milestones that ensure shareholder benefit. I’d call that very accretive.
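To make the arithmetic above easy to check, here is a minimal sketch in Python. The inputs are the figures quoted in this article ($10 billion EBIT, a 20× multiple, $100 billion in equity); the function names are my own, and the calculation ignores taxes, discounting, and the timing of dilution, so treat it as a rough sanity check rather than a valuation model.

```python
def implied_market_cap_added(ebit_bn: float, multiple: float) -> float:
    """Market cap (in $bn) implied by applying an EBIT multiple."""
    return ebit_bn * multiple


def accretion_ratio(value_added_bn: float, equity_granted_bn: float) -> float:
    """Value created per dollar of equity handed over."""
    return value_added_bn / equity_granted_bn


def break_even_multiple(ebit_bn: float, equity_granted_bn: float) -> float:
    """EBIT multiple at which value added equals equity granted."""
    return equity_granted_bn / ebit_bn


# Figures quoted in the article (all in billions of dollars).
EBIT_FY2030_BN = 10.0      # BofA estimate of added EBIT
EBIT_MULTIPLE = 20.0       # multiple assumed in the article
EQUITY_GRANTED_BN = 100.0  # approximate value of the warrants

added = implied_market_cap_added(EBIT_FY2030_BN, EBIT_MULTIPLE)
ratio = accretion_ratio(added, EQUITY_GRANTED_BN)
floor = break_even_multiple(EBIT_FY2030_BN, EQUITY_GRANTED_BN)

print(f"Added market cap: ${added:.0f}bn")
print(f"Value added per $1 of equity granted: ${ratio:.2f}")
print(f"Break-even EBIT multiple: {floor:.0f}x")
```

Under these assumptions, the deal stays accretive as long as the market values the added EBIT at more than the break-even multiple, which gives a simple way to stress-test the 20× figure.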

But the real benefits go beyond financials.

AMD will have, for the first time, the opportunity to deploy AI accelerators at gigawatt scale. It won’t be just CPUs or GPUs. With MI450, AMD finally has a rack-scale system with scale-up capabilities comparable to NVIDIA’s. There is no better moment to showcase this kind of product than through massive OpenAI deployments.

This partnership gives AMD validation and visibility, especially among hyperscalers. If their systems deliver performance close to NVIDIA’s Rubin and come at a lower TCO (total cost of ownership), then market share gains for AMD will be hard to stop.

This may even pressure NVIDIA to lower prices, which could compress margins. In fact, gross margins at NVIDIA appear to have peaked and are now compressing, though they’re still around 20 percentage points higher than AMD’s. There is a real chance those margins could converge in the next few years.

Finally, this deal gives AMD another very valuable benefit. AMD is doing a tremendous job with its software. It has gone from being a mess in 2023, to going open source in 2024, which attracted a lot of developer attention, to having, in 2025, a competitive open source ecosystem that gets closer to matching CUDA every day, as third-party analyst group SemiAnalysis has repeatedly noted.

Now, this deal gives OpenAI the incentive to contribute to AMD’s open source stack to make the inference performance of the MI450 accelerators top notch. There isn’t a better partner to help improve your software than OpenAI, and now AMD has the perfect chance to finally reach parity with CUDA and remove one of NVIDIA’s biggest advantages.

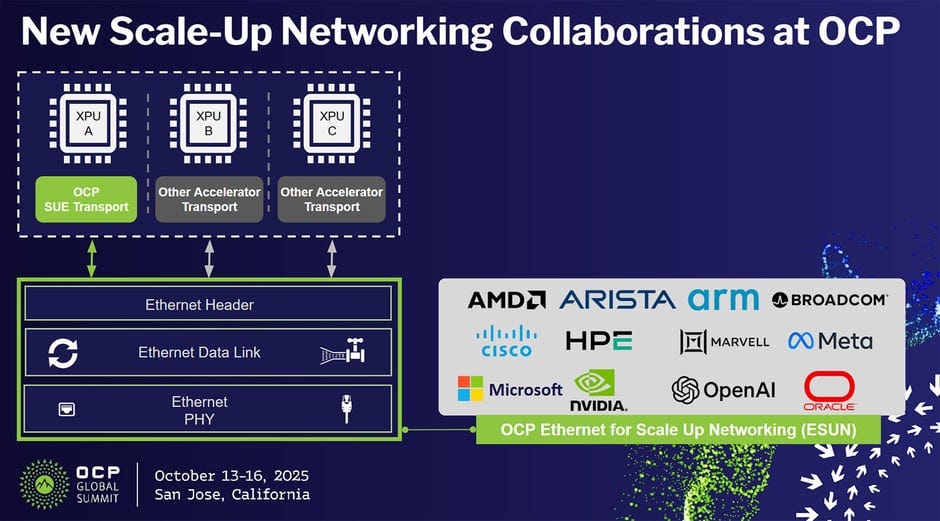

Another important aspect of the deal comes from the networking side. NVLink has allowed NVIDIA to scale up their racks like no one else in the industry. The only company with comparable performance is Google with their TPUs, which use proprietary ICI technology for scale-up. AMD has lagged behind in networking, but that’s about to change.

First, the MI450 is intended to match NVLink with AMD’s proprietary networking. But it doesn’t end there. The industry is moving toward a standardized scale-up fabric: open networking hardware that everyone can use, which would remove NVLink’s competitive advantage. It’s the most efficient solution.

There are two important initiatives leading this effort:

ESUN (Ethernet for Scale-Up Networking)

An Open Compute Project workstream that keeps Ethernet as the scale-up fabric for AI clusters. It defines low-latency, lossless Ethernet behaviors, switch and NIC requirements, and interoperability so multiple vendors can build compatible racks. Backers include AMD, NVIDIA, Broadcom, Marvell, Arista, Cisco, Microsoft, Meta, OpenAI, Oracle, Arm, and HPE.

UALink Consortium

An open accelerator-to-accelerator fabric seeded from AMD’s Infinity Fabric. It runs a custom low-latency link layer over modern Ethernet PHYs, adds memory-style reads, writes, and atomics, and targets pods of up to 1,024 accelerators using dedicated UALink switches. Promoter members include AMD, Intel, Microsoft, Meta, Google, AWS, HPE, Cisco, Apple, Alibaba, and Synopsys.

AMD is involved in both initiatives and intends to adopt, by 2027 with MI500, whichever solution provides the best performance. The industry’s push for standardized hardware that doesn’t depend on NVLink is massive, and NVLink’s moat is ending soon. Through this OpenAI deal, AMD will be able to bring this new standard to gigawatt scale. If it performs as expected, it will be a massive win for the open standard compared with NVIDIA’s closed infrastructure.

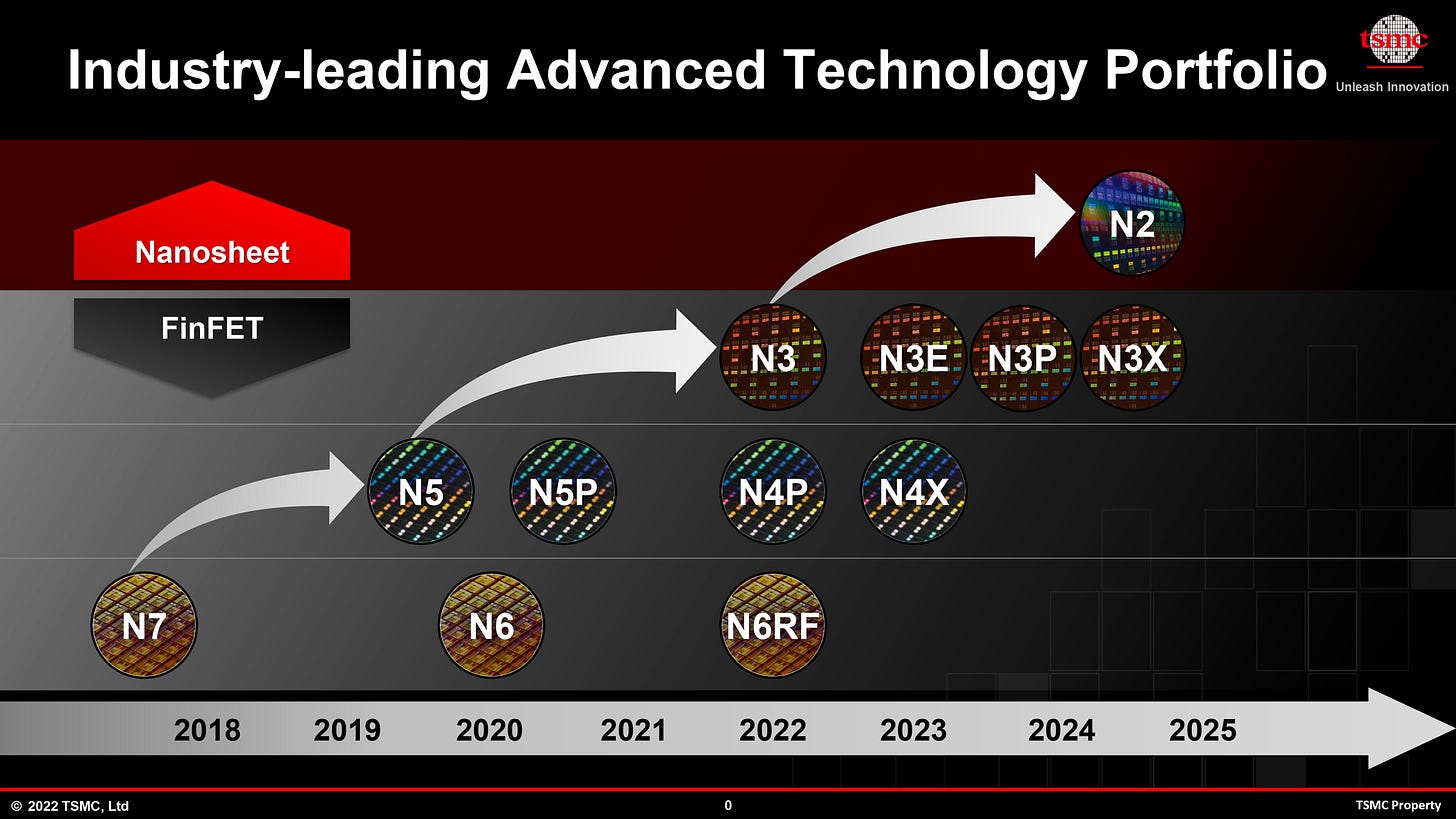

MI450 Will Use 2nm (N2)

Following this deal, AMD announced that the MI450 will be manufactured using TSMC’s 2nm platform, the company’s most advanced node, which has just gone online.

This node allows for roughly 15% higher performance and around 30% better efficiency per watt, in exchange for a 15% higher cost per wafer compared with the previous 3nm node, which is the one Rubin is planned for. NVIDIA may choose to move to 2nm as well, but that would be very difficult in practice.
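As a rough illustration of that trade-off, the sketch below normalizes the figures quoted above against the 3nm node. It rests on a strong simplifying assumption of mine, not something stated by AMD or TSMC: that both nodes yield the same number of good chips per wafer, so wafer cost maps directly to chip cost. The function name is hypothetical.

```python
def n2_relative_to_n3(perf_gain: float,
                      perf_per_watt_gain: float,
                      wafer_cost_increase: float) -> dict:
    """Return 2nm metrics as multiples of the 3nm baseline (3nm = 1.0).

    Assumes the same number of good chips per wafer on both nodes,
    which is a simplification made only for this illustration.
    """
    perf = 1.0 + perf_gain
    cost = 1.0 + wafer_cost_increase
    return {
        "performance": perf,
        "wafer_cost": cost,
        "perf_per_wafer_dollar": perf / cost,
        "perf_per_watt": 1.0 + perf_per_watt_gain,
    }


# Approximate figures quoted in the article.
n2 = n2_relative_to_n3(perf_gain=0.15,
                       perf_per_watt_gain=0.30,
                       wafer_cost_increase=0.15)

for metric, value in n2.items():
    print(f"{metric}: {value:.2f}x vs 3nm")
```

Read this way, the extra wafer cost is roughly offset by the extra performance, and the efficiency gain is what remains. That matters most where power is the binding constraint, as in deployments measured in gigawatts.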

TSMC, which will remain the main manufacturer of AI chips for the coming generations, won’t reach wafer processing peak for 2nm until 2028. AMD plans to start manufacturing on this node by Q3 next year. As a smaller player, AMD can secure enough capacity with what TSMC can provide. For NVIDIA, it would be much harder.

If NVIDIA wanted to use this node, it would need to design two Rubin chips, one for 2nm and another for 3nm. That would require separate teams and double the risk of delays or design issues. In this case, being smaller benefits AMD.

How This Affects NVIDIA

NVIDIA is generally fine. There’s enough market for everyone, but their margins could compress. And it’s not just because of AMD.

Currently, AMD isn’t even the second best in the world. This isn’t breaking news. The thesis with AMD has always been about future potential, not its current state. The second best right now is Google. Their TPUs can scale like NVIDIA’s systems, and their performance is good enough to train Gemini solely with their chips.

Although these chips carry the Google brand, they’re actually developed by Broadcom, which provides the IP and technical expertise. You could say the Google–Broadcom team is the true number two.

Historically, Google has kept TPUs for internal use, only renting the leftover capacity through its cloud. That’s now changing. In major AI industry news, Google has reportedly decided to sell TPUs to neoclouds.

This means it’s no longer just AMD pushing the pace. Google also wants a bigger share of a market that has been 90% dominated by NVIDIA since 2023.

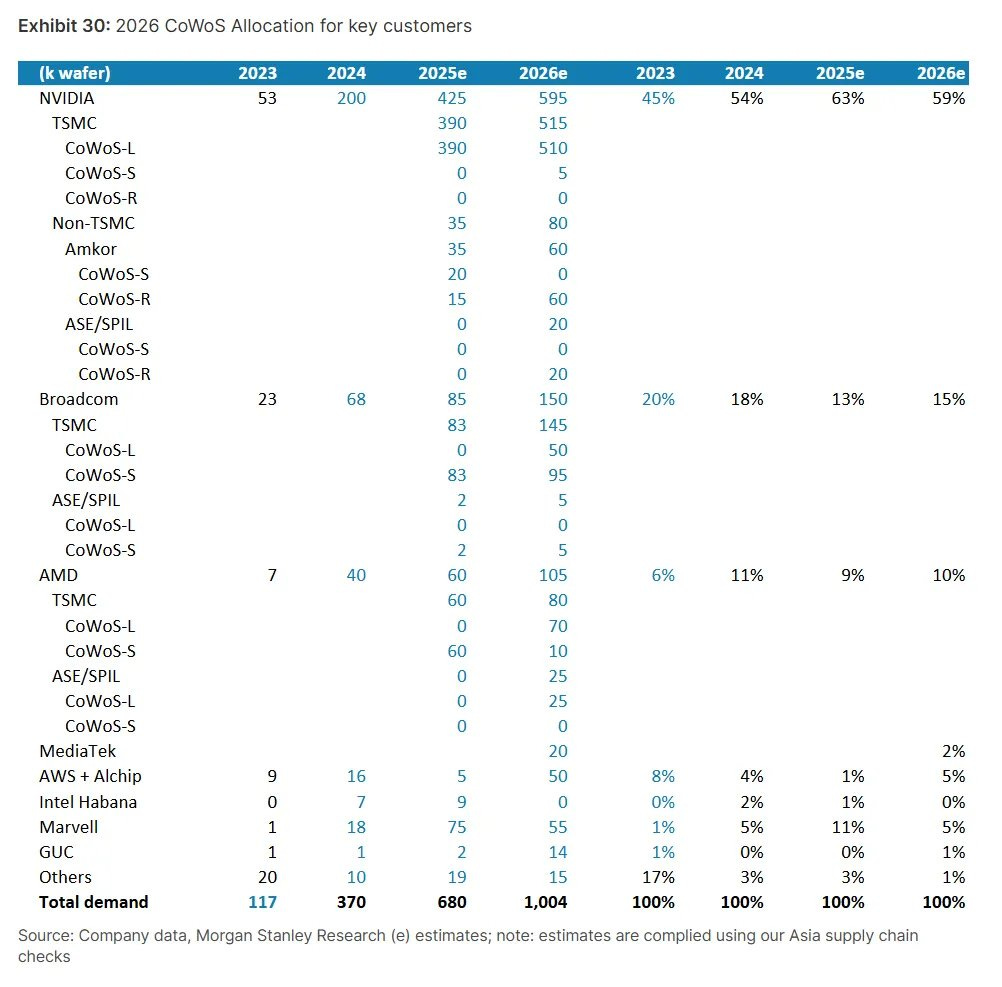

That was never sustainable, and CoWoS allocation estimates from Morgan Stanley, made before the recent OpenAI deals with AMD and Broadcom, already pointed in this direction.

In this CoWoS demand estimation from August, which serves as a proxy for AI accelerator market share, we can see that NVIDIA is expected to lose 4% of CoWoS allocation, while AMD and Broadcom are expected to gain 1% and 2%, respectively. Amazon, which replaced Marvell with Alchip for the development of its next-generation ASICs, is also expected to grow its share.

After the recent OpenAI deals, I’d say the next CoWoS allocation estimates will likely be even more skewed toward non-NVIDIA players. I don’t expect NVIDIA to start regaining market share anytime soon, especially with MI500 on the horizon.

Going Forward

AMD MI450 is already a bestseller, but I believe we’ll likely see more deals. Oracle just announced plans to deploy 50,000 MI450 GPUs starting in H2 2026, and I wouldn’t be surprised to see Microsoft or Meta sign a deal as well.

Lisa Su highlighted that the MI450 is receiving a lot of attention and confirmed they are working with additional customers.

The MI300X was a great system. Its biggest problem wasn’t scale, it was the software. Despite the software issues, AMD still managed to sell a significant number of these systems to Meta and Microsoft. These customers didn’t buy MI325X or MI355X, which is understandable. NVIDIA left AMD behind in scale with Blackwell. However, now that the MI450 removes that limitation, and with AMD’s software stack reaching CUDA-worthiness, I believe we’re likely going to see Meta and Microsoft return as buyers.

These two hyperscalers don’t have ASIC development as advanced as Amazon, which has heavily invested in its own rack-scale systems. Meta and Microsoft have plans, but they’re still at an early stage and are not ready for 2026.

I also believe xAI is likely to purchase chips, as they’ve been customers for the MI325X and Elon Musk has said “AMD chips work really well for mid-sized models.” Now that AMD’s systems can handle any model, the field is wide open.

I also see upside in sovereign AI deals. In the first half of 2025, Lisa Su traveled around the world meeting with government officials from key countries. I believe these efforts will bear fruit. AMD has already signed a deal with Humain, a company backed by Saudi Arabia, and I believe this is just the beginning. Many countries want proprietary data centers, control of their data, and sovereign AI capabilities. When AMD offers a product as competitive as NVIDIA’s at a lower price, the deals come more easily.

What I think the market is not pricing in at all is what I mentioned earlier: validation, a much higher likelihood of new contracts with hyperscalers, and software improvements. These are the kinds of qualitative factors the market typically ignores until they show up in the P&L.

Valuation
