AMD: The next AI giant?

A new system designed to surpass NVIDIA, a massive order from Oracle, cutting-edge benchmarks, and a key collaboration with OpenAI. Is AMD a stock to hold for the AI race?

AMD just held what is probably the most important event in its history.

AI accelerators, rack-scale systems, software breakthroughs, and major partnerships, including a collaboration with OpenAI.

Let’s walk through everything now that the dust has settled.

The big picture

Sometimes we miss the forest for the trees. What AMD is building isn’t just a product roadmap; it’s the foundation of a potential multi-trillion-dollar company. That’s the first point Lisa Su emphasized. This is an AI-powered revolution that will transform the world, and AMD wants to be at the center of it.

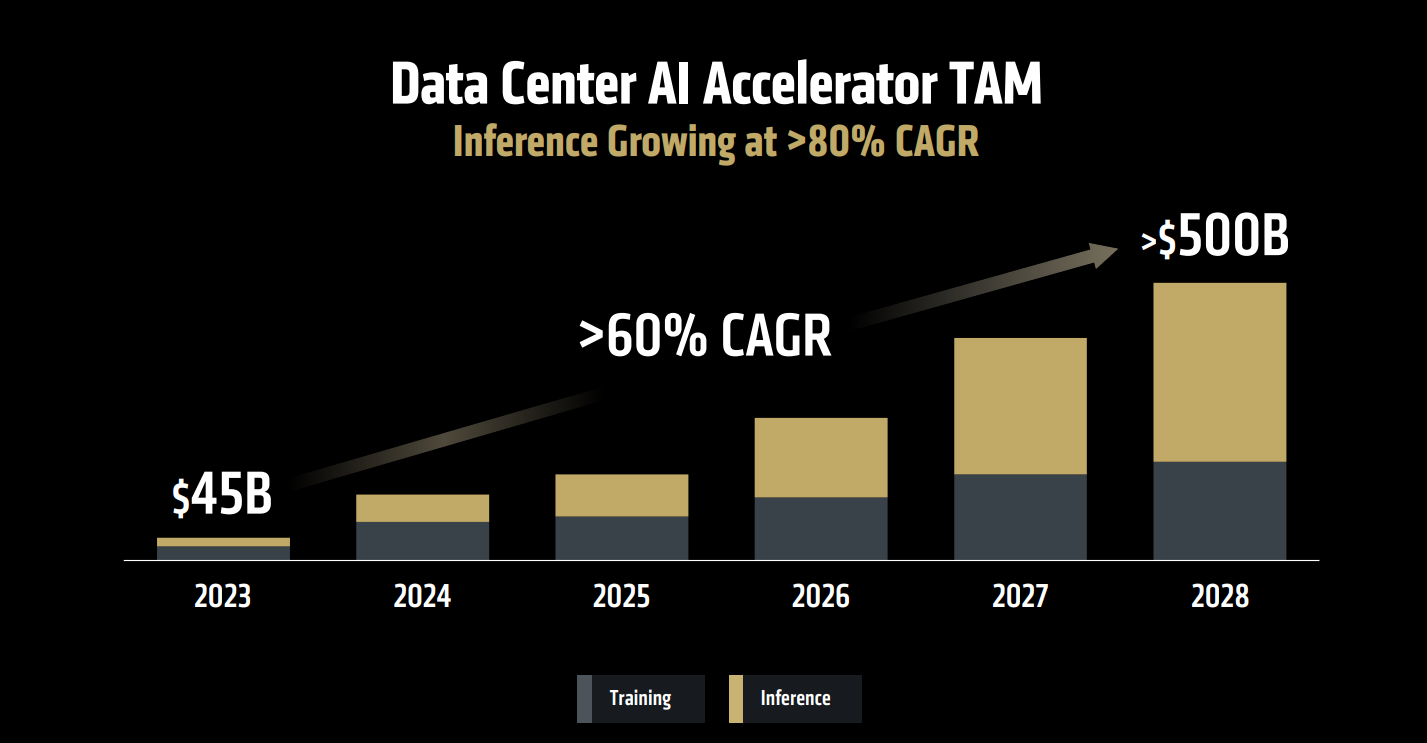

To illustrate the size of the opportunity, AMD shared market projections for AI accelerators, broken down by training and inference.

We’re talking about a 60 percent CAGR leading to a 500-billion-dollar market in under four years, with inference growing at an 80 percent CAGR. This is massive. If we apply a 20x sales multiple to that market size, which the high growth could justify, it becomes a 10-trillion-dollar opportunity for AI accelerator producers.
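As a rough check on the arithmetic above, the sketch below reproduces the 10 trillion dollar figure and backs out the starting market size that a 60 percent CAGR would imply. The four-year horizon is an assumption (the text only says "under four years"), and the function names are illustrative.

```python
def implied_valuation(market_size_usd: float, sales_multiple: float) -> float:
    """Market size multiplied by a sales multiple, as done in the text."""
    return market_size_usd * sales_multiple


def implied_base(target_usd: float, cagr: float, years: float) -> float:
    """Starting market size that compounds to target_usd at the given CAGR."""
    return target_usd / (1.0 + cagr) ** years


TARGET_MARKET = 500e9  # 500 billion dollars, from the text
MULTIPLE = 20          # 20x sales, from the text
CAGR = 0.60            # 60 percent, from the text
YEARS = 4              # assumption: the text says "under four years"

valuation = implied_valuation(TARGET_MARKET, MULTIPLE)
base = implied_base(TARGET_MARKET, CAGR, YEARS)

print(f"Implied opportunity: ${valuation / 1e12:.1f} trillion")
print(f"Implied starting market: ${base / 1e9:.1f} billion")
```

Under these assumptions the starting market works out to roughly 76 billion dollars. A shorter horizon would imply a larger starting base.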

But this isn't just about accelerators.

A 360 approach

There’s a clear opportunity on other fronts, where AMD aims to lead as well.

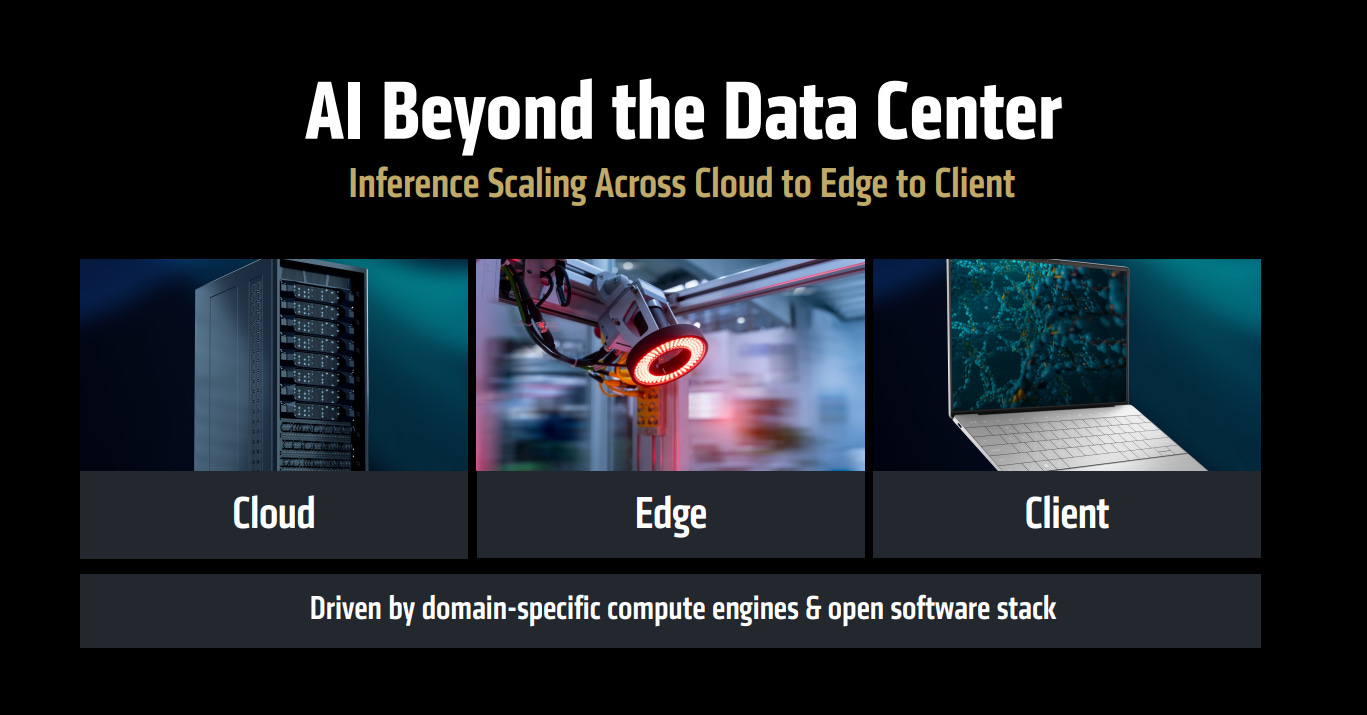

AMD offers the full package for AI in the cloud: GPUs, CPUs, DPUs, and NICs. It also aims to lead in AI at the edge through FPGAs, as well as in client-scale computing, where it already leads with its innovative APUs.

AMD’s value proposition in the AI race is designed to be complete and comprehensive.

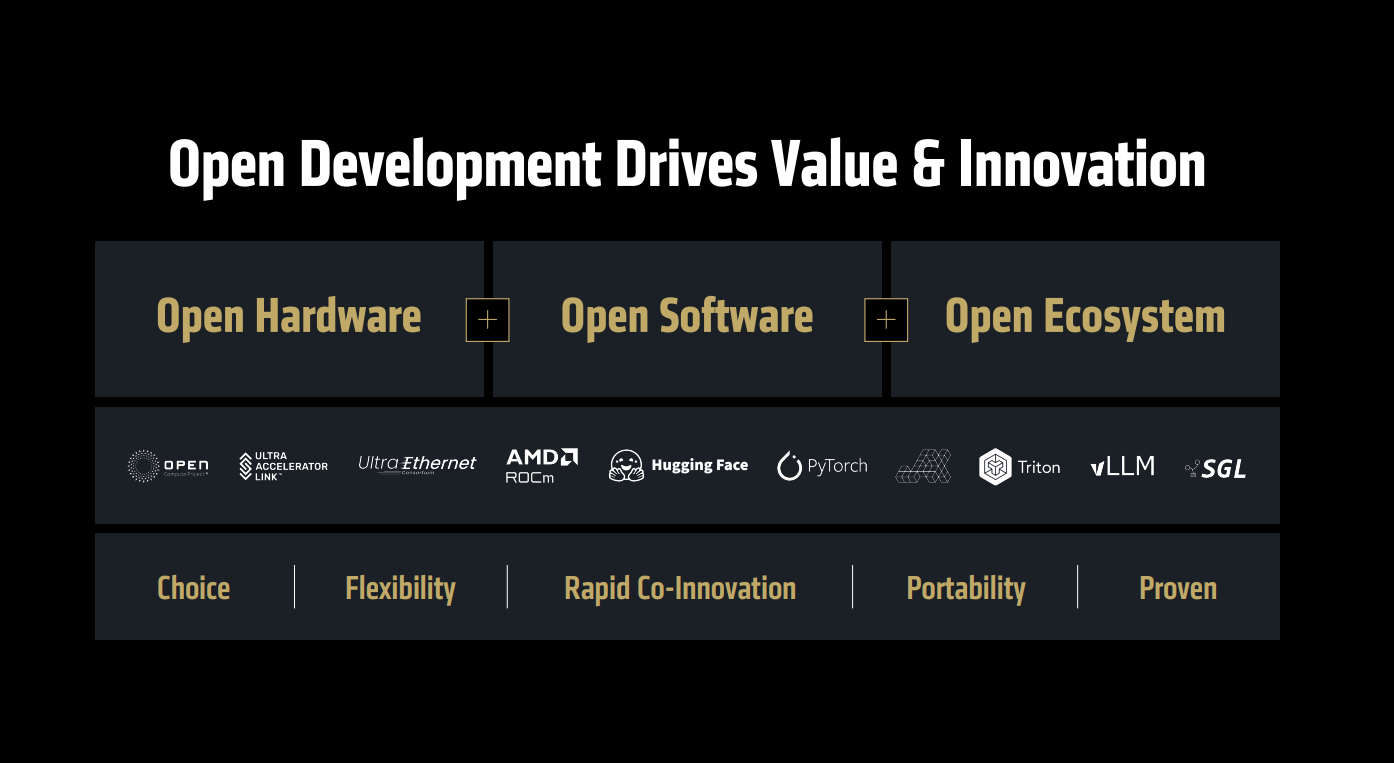

Compute leadership through its chips, the most open ecosystem with ROCm, and a full-stack solution that spans every layer of the data center, from CPU to server to rack design.

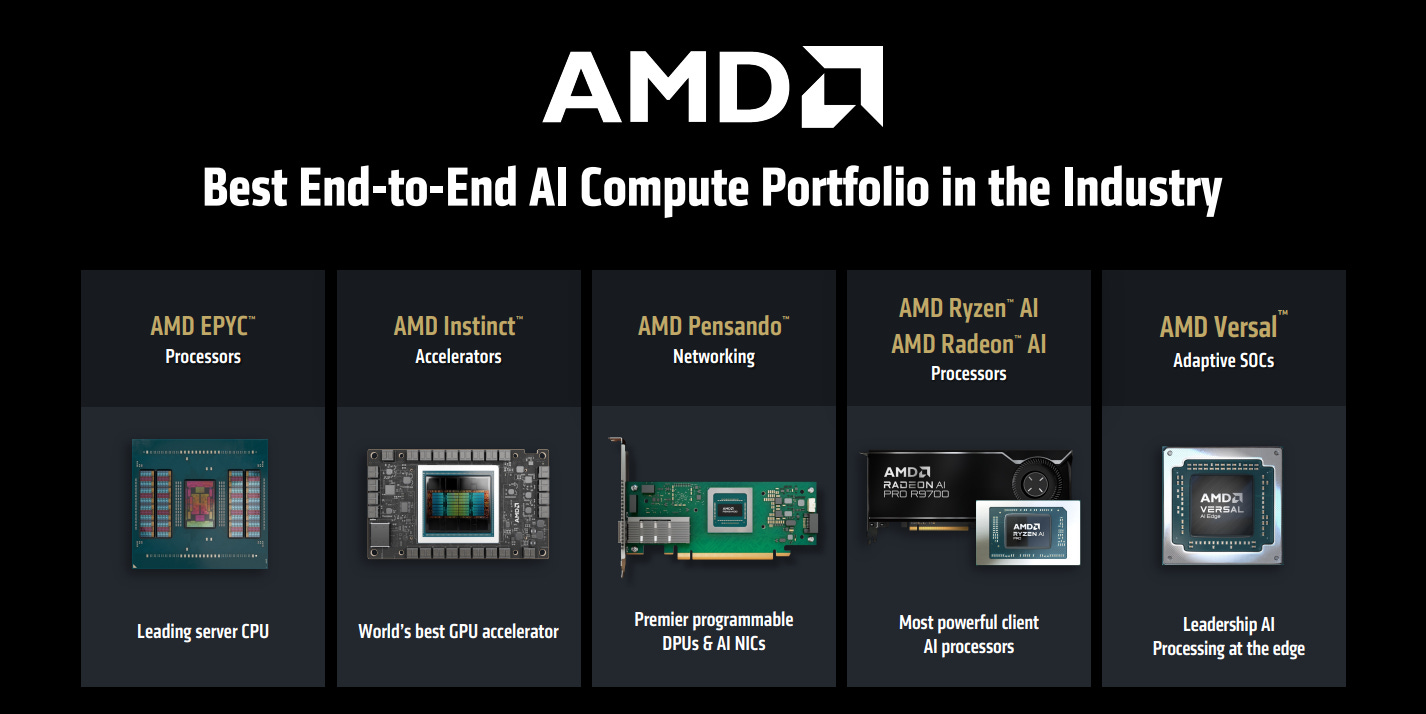

To achieve this, AMD is building a complete portfolio across all key components:

A lineup designed to rule them all, from industry-leading EPYC processors to edge AI with AMD Versal FPGAs.

All of it built within an open-source, collaborative ecosystem alongside key partners:

Open hardware through the UALink initiative. Open software integrations with platforms like Hugging Face, PyTorch, and Triton (by OpenAI). And an open ecosystem centered on ROCm, which is evolving rapidly to take full advantage of open source and challenge CUDA head-on.

So, how did AMD manage to significantly improve its software stack, build a complete hardware solution, and address every layer of AI infrastructure?

Through strategic acquisitions and targeted investments:

AMD acquired the largest FPGA company in the world, Xilinx, along with ZT Systems to design servers and racks that integrate its leading compute components into a unified system.

They brought in Nod.ai and Brium to accelerate improvements in ROCm, and added Silo AI and Lamini to support enterprises in deploying models at the edge. Mipsology was acquired to unlock the full potential of FPGAs in AI, while Enosemi is helping develop photonic interconnects, the future of high-speed connectivity. With Pensando, AMD strengthened its capabilities in DPUs and NICs, enhancing system performance and interconnect efficiency.

In short, AMD is laying the foundation for a multi-trillion dollar company.

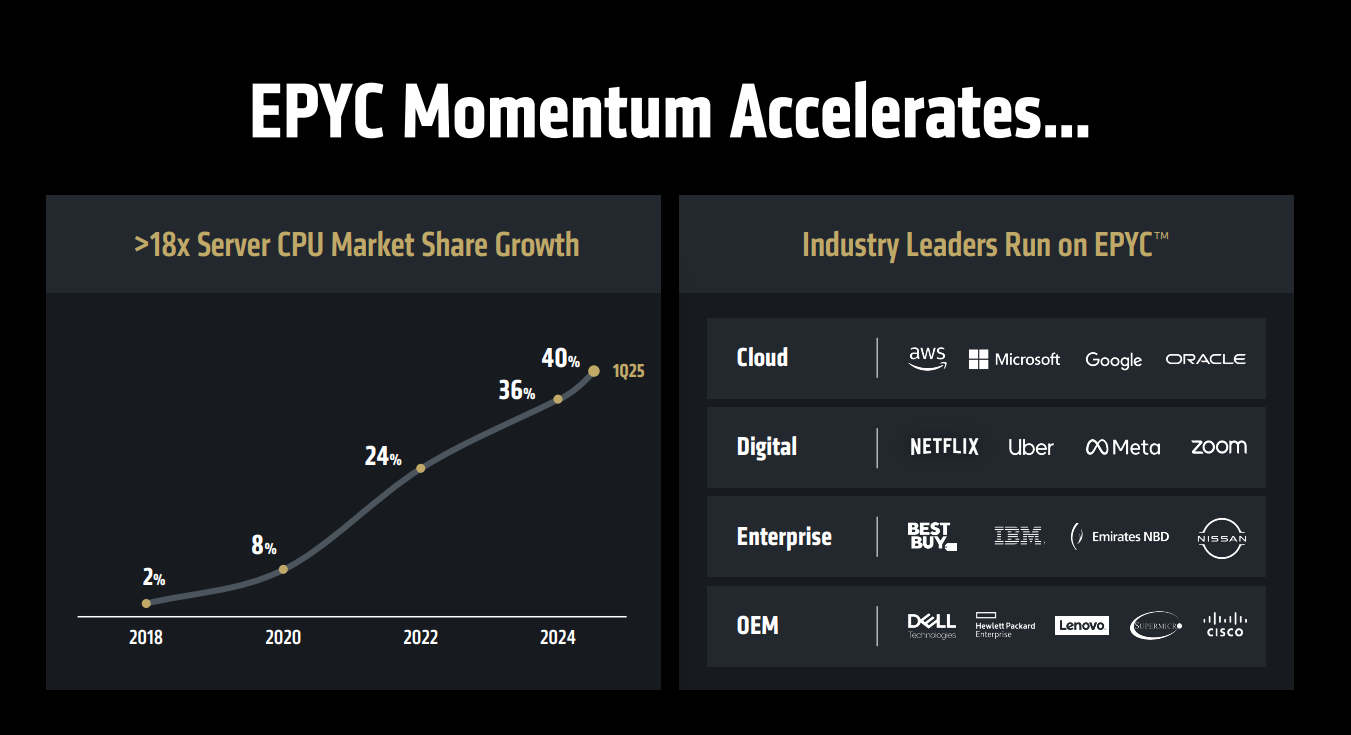

Its leadership in data center CPUs is unparalleled:

40% market share, an 18x increase since 2018. This is the type of growth we could see in GPUs in the coming years.
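To put the 18x figure in perspective, the sketch below backs out the 2018 starting share and the annualized growth rate it implies. The seven-year span (2018 to 2025) is an assumption, and the variable names are illustrative.

```python
CURRENT_SHARE_PCT = 40.0  # from the text
GROWTH_MULTIPLE = 18.0    # from the text
YEARS = 7                 # assumption: 2018 through 2025

# Share AMD would have held in 2018 if 40% is an 18x increase.
start_share_pct = CURRENT_SHARE_PCT / GROWTH_MULTIPLE

# Compound annual growth rate of the share over the assumed span.
annual_growth = GROWTH_MULTIPLE ** (1.0 / YEARS) - 1.0

print(f"Implied 2018 share: {start_share_pct:.1f}%")
print(f"Implied annual share growth: {annual_growth:.0%}")
```

This puts the 2018 share at just over 2%, with the share compounding at roughly 51% a year under the assumed span.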

AI Accelerators

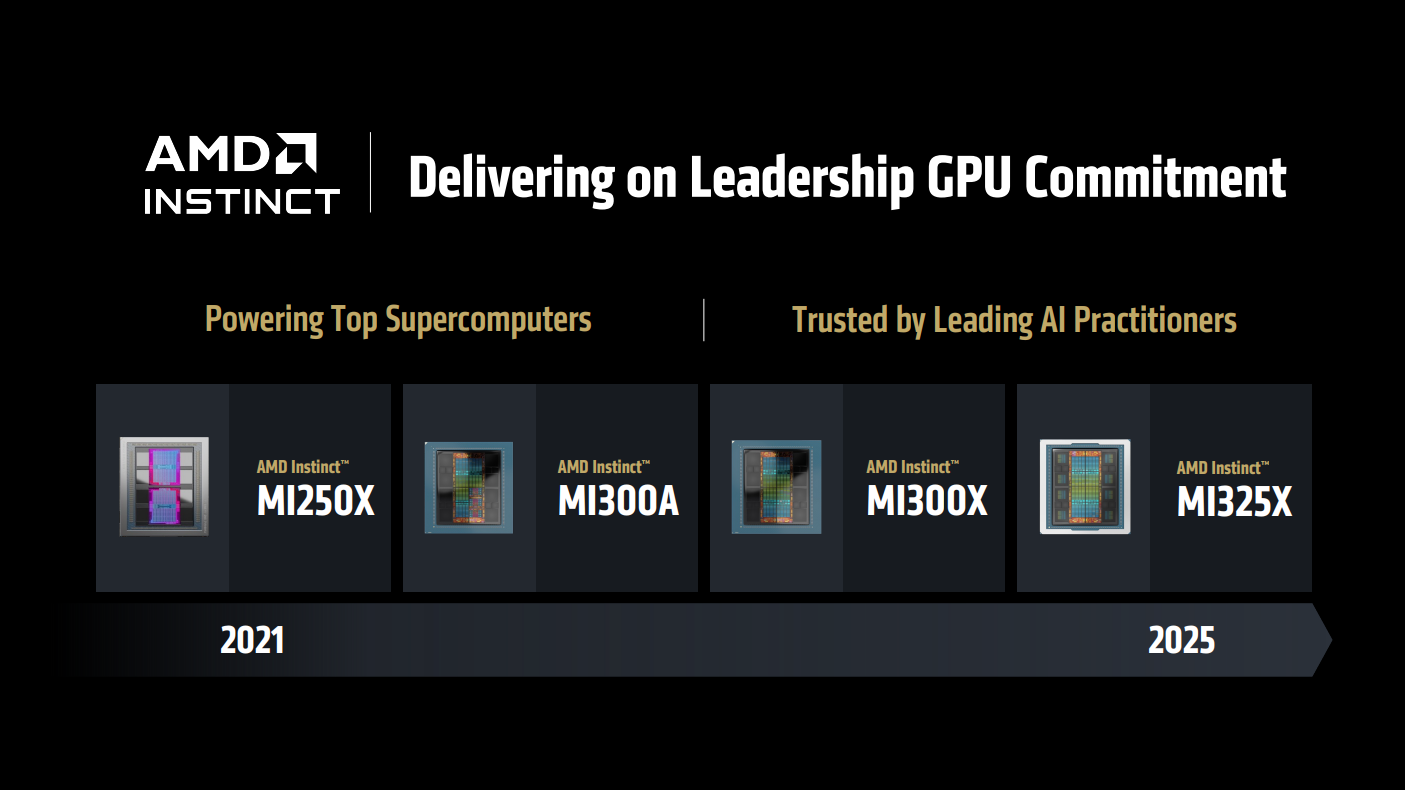

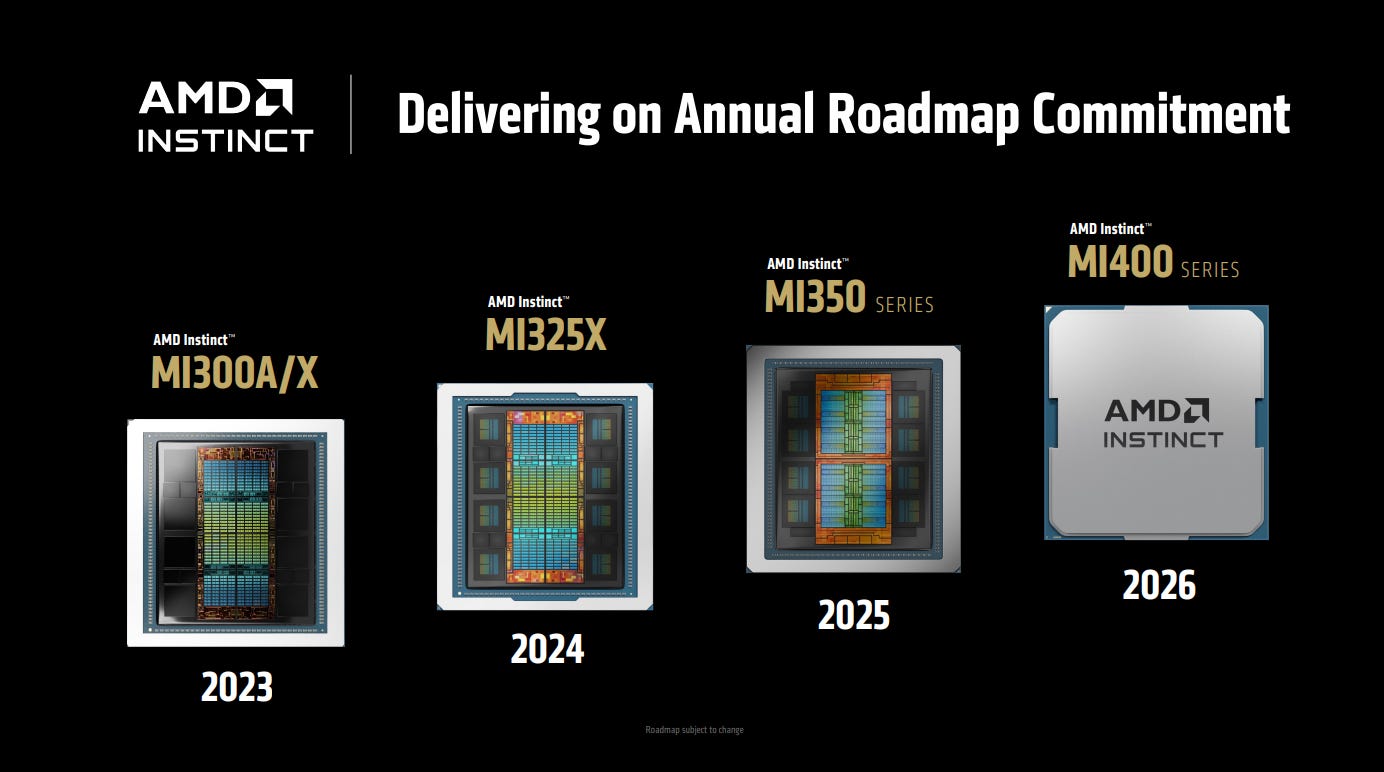

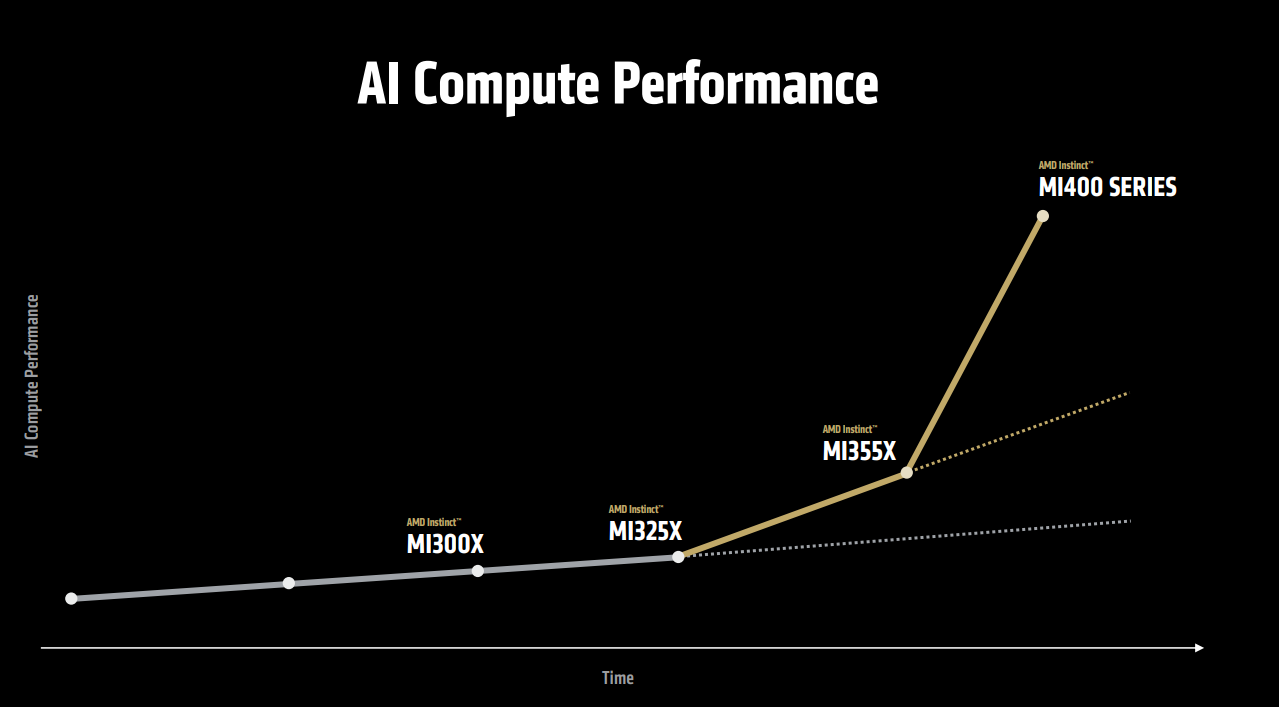

Now, let’s turn to AI Accelerators. AMD has made tremendous progress in a short time, but what’s even more compelling is what comes next.

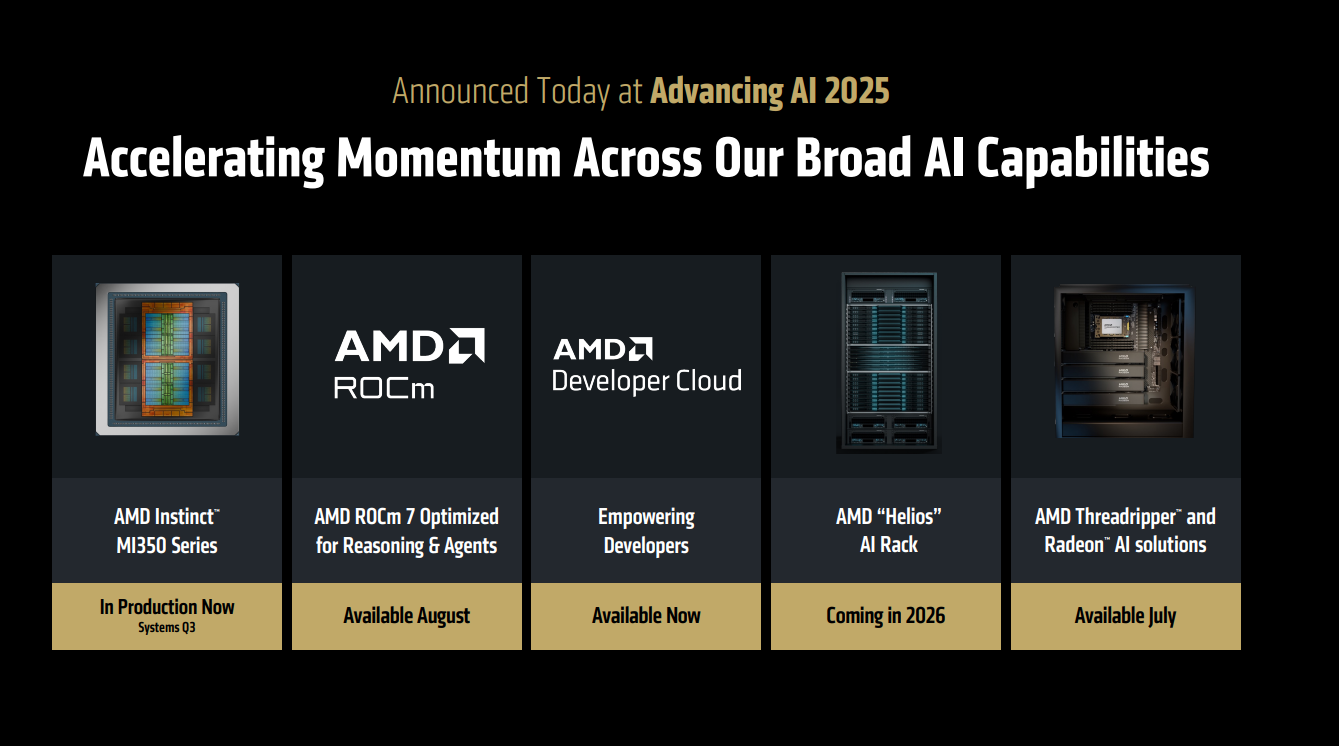

And that’s where the MI350 series comes in, launching in Q3 2025, followed by the MI400 series in 2026.

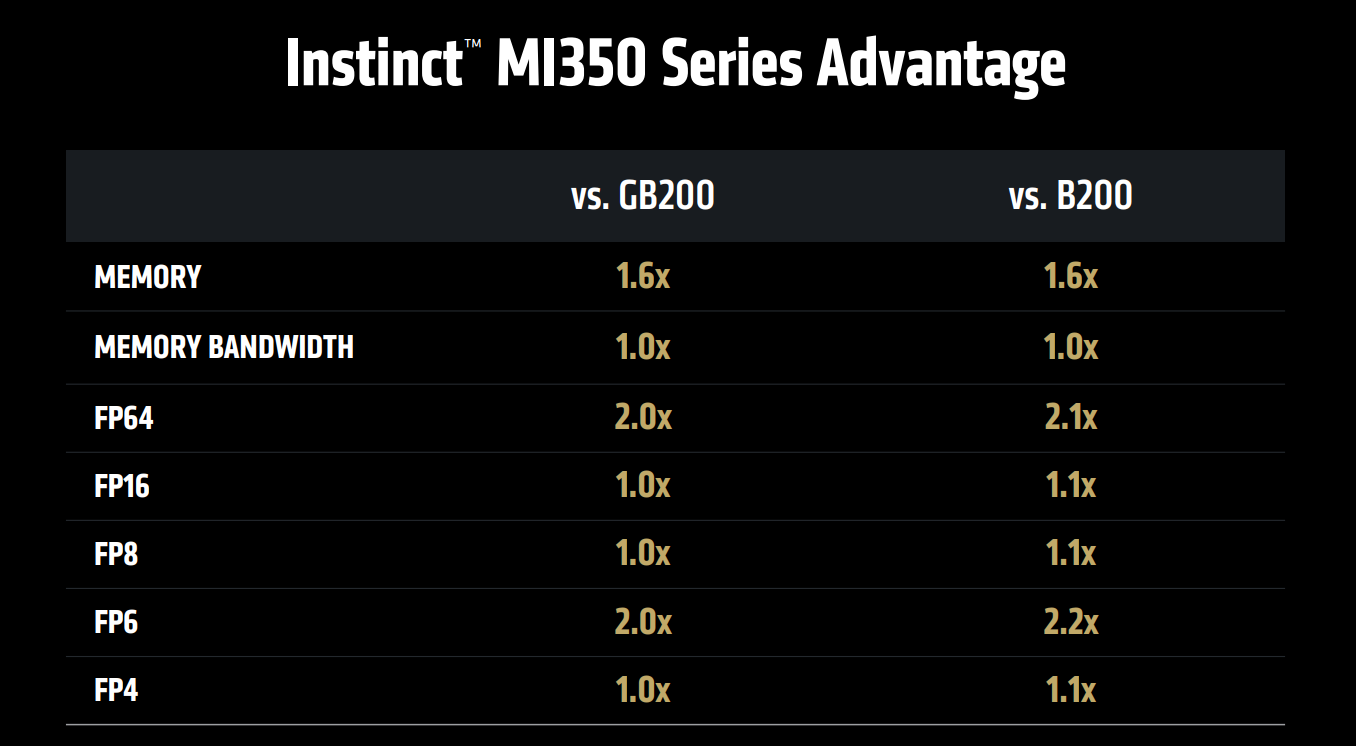

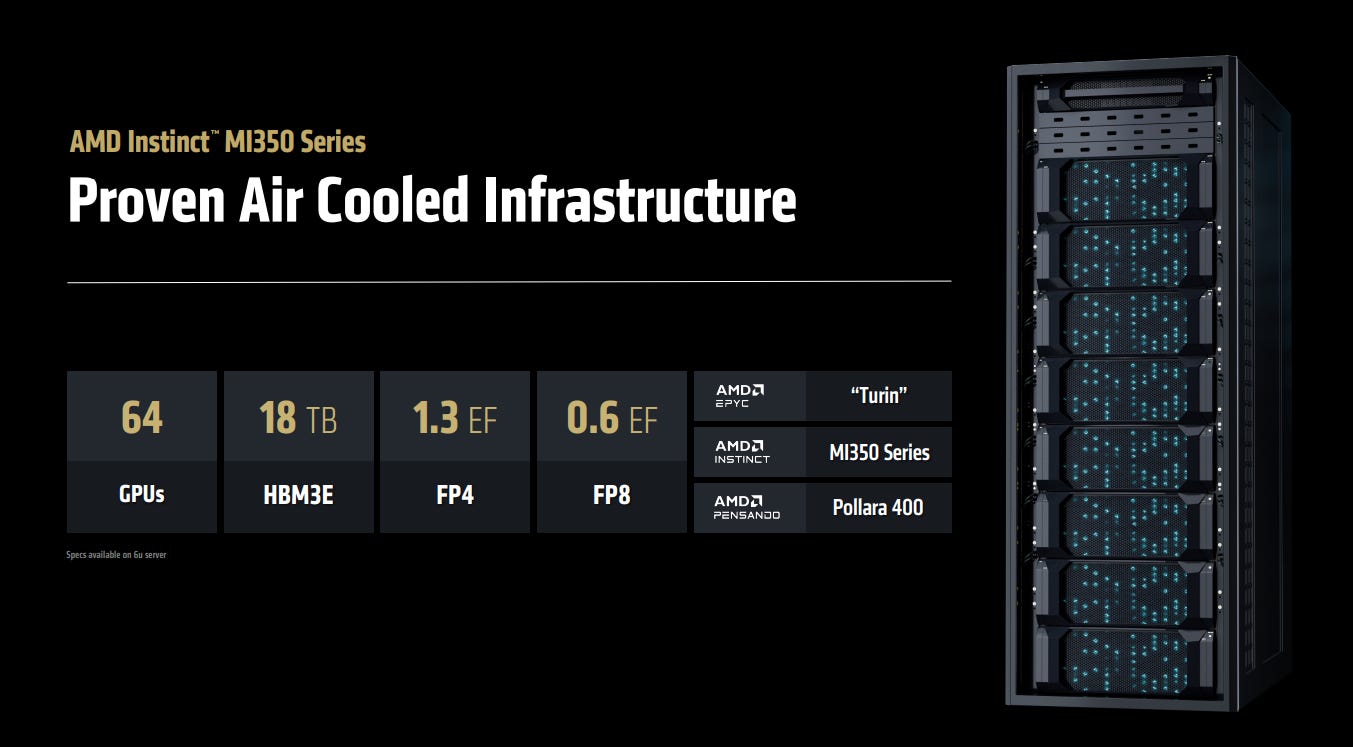

The MI350 series represents a tremendous leap in technology, with specifications that, in some cases, surpass NVIDIA’s offerings:

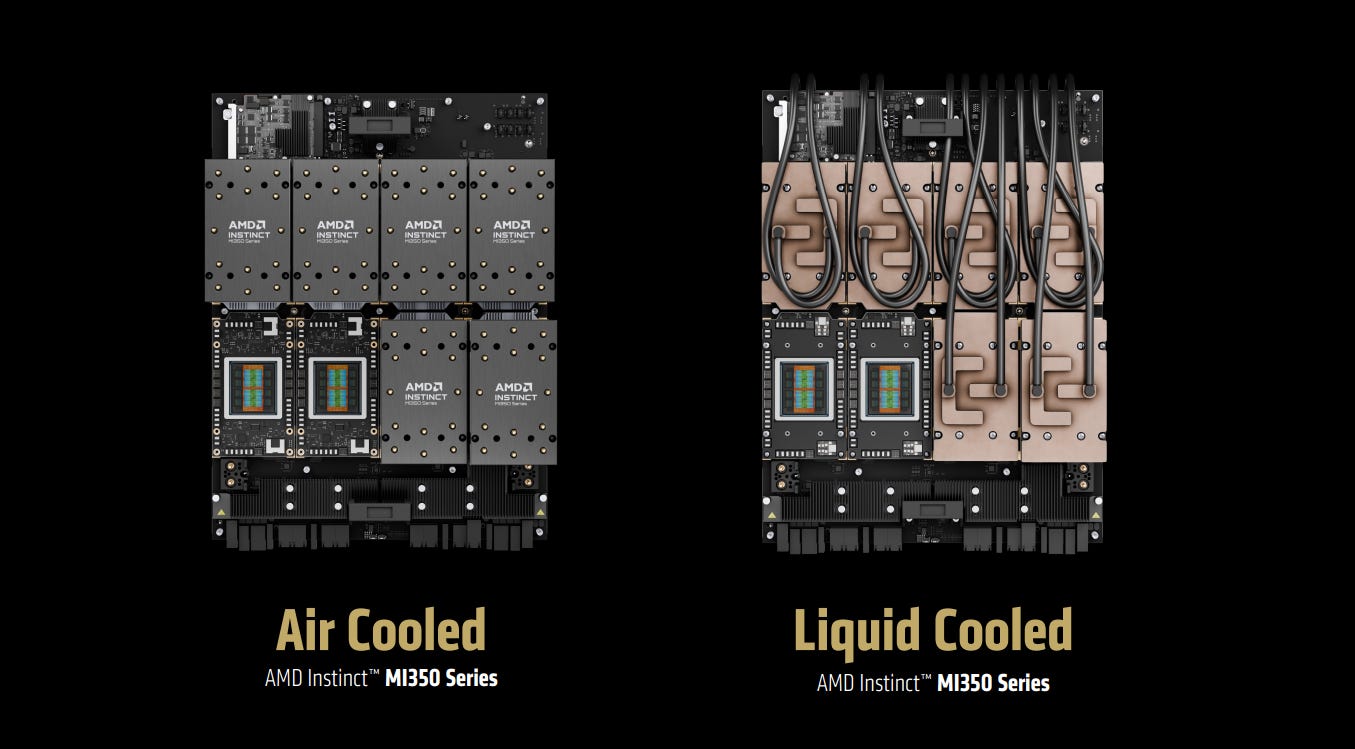

It will be available in two versions: air-cooled and liquid-cooled:

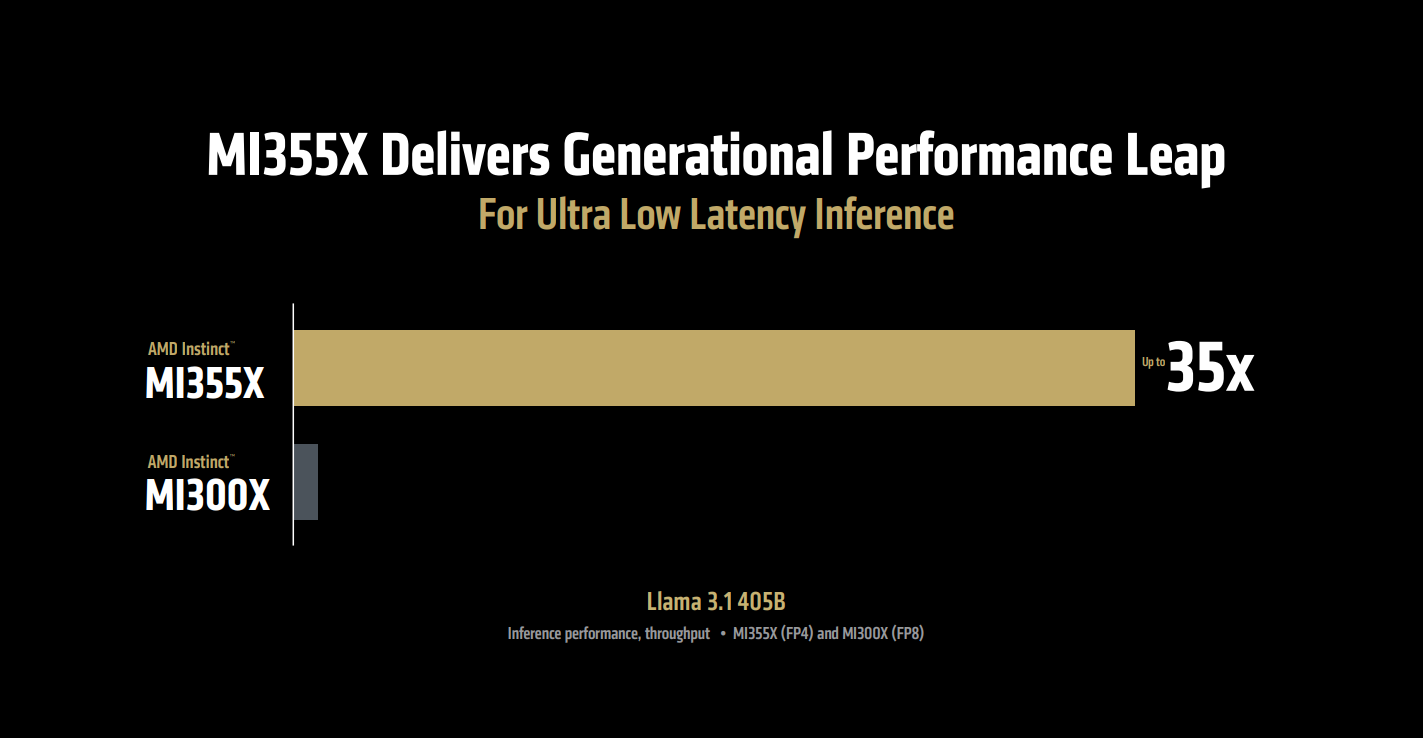

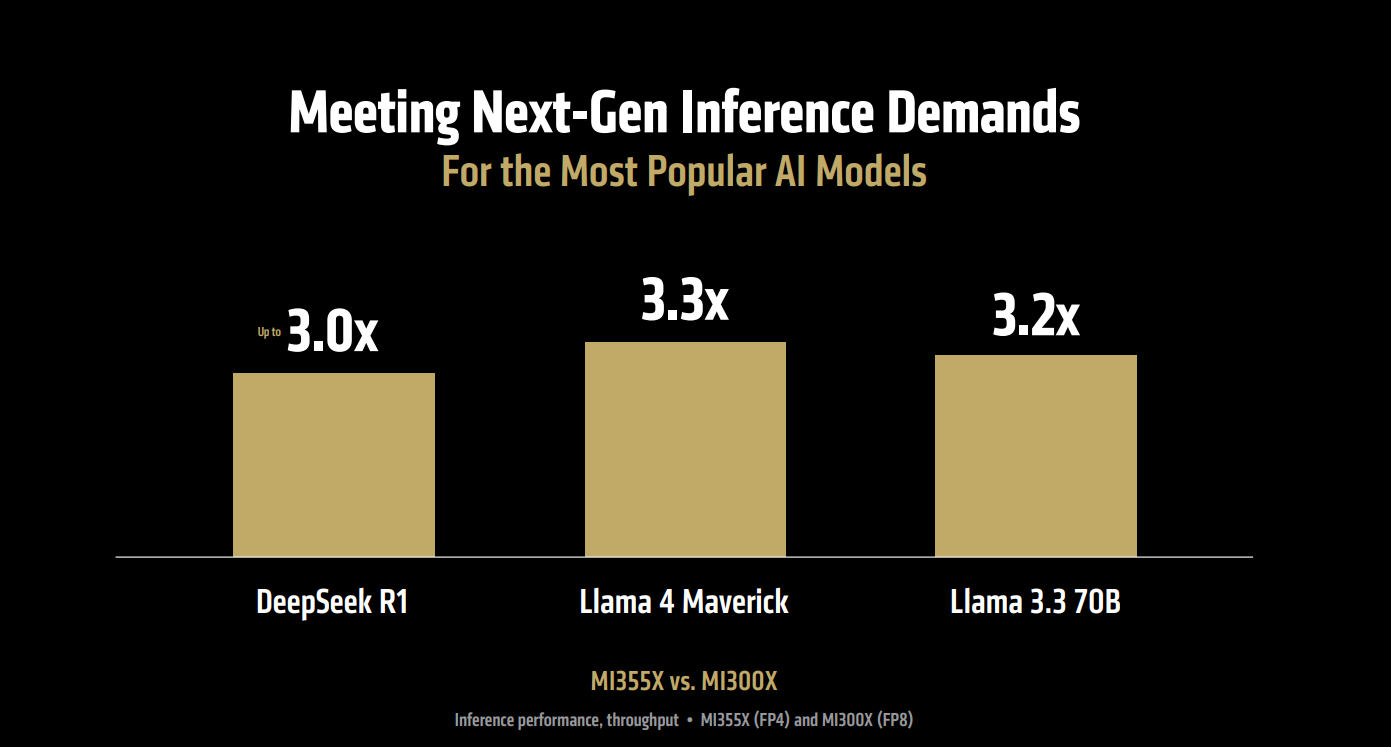

And the performance is tremendous:

Offering over 3x the performance of the previous generation. Keep in mind, the MI300X was already adopted for inference by Meta, OpenAI, and Microsoft, among others, yet the MI355X blows it out of the water.

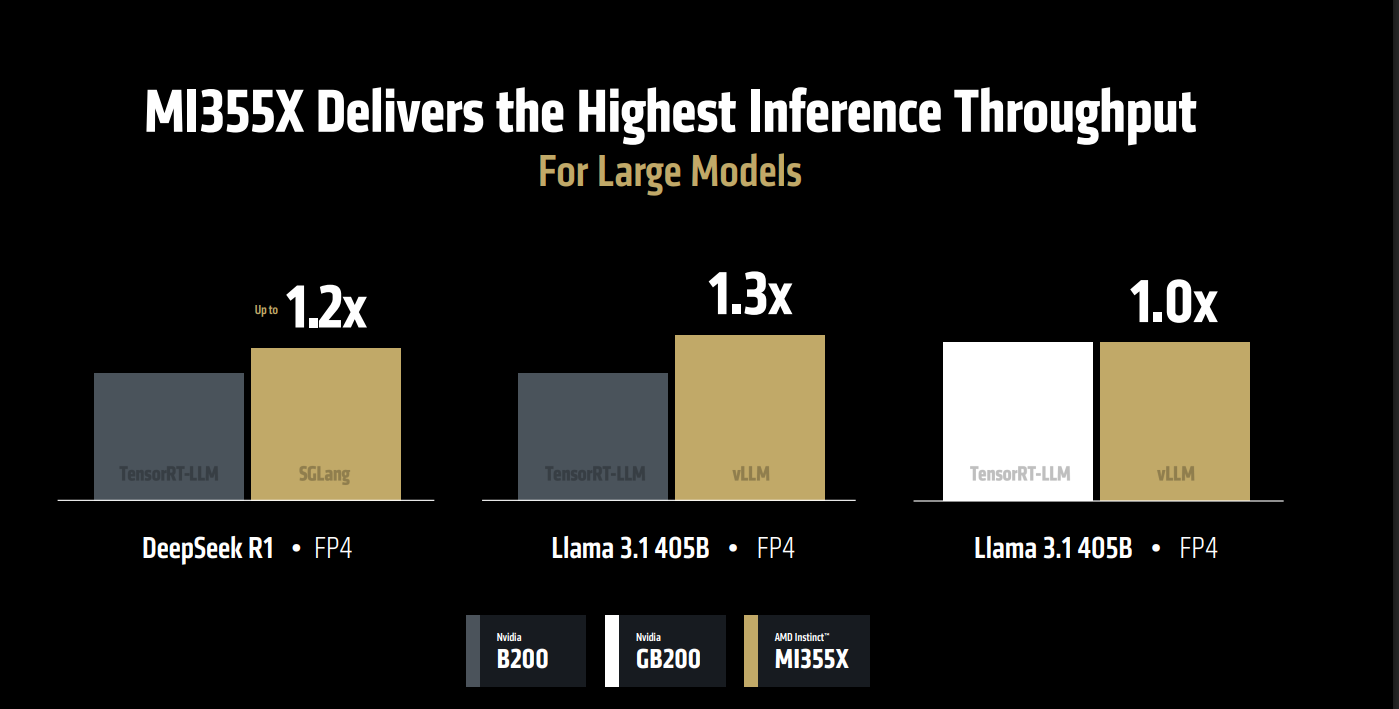

And how does it stack up against NVIDIA in some benchmarks? Very well:

Impressive performance, delivered through a system that's significantly more affordable than NVIDIA’s. According to AMD, that translates into up to 40% more tokens per dollar:

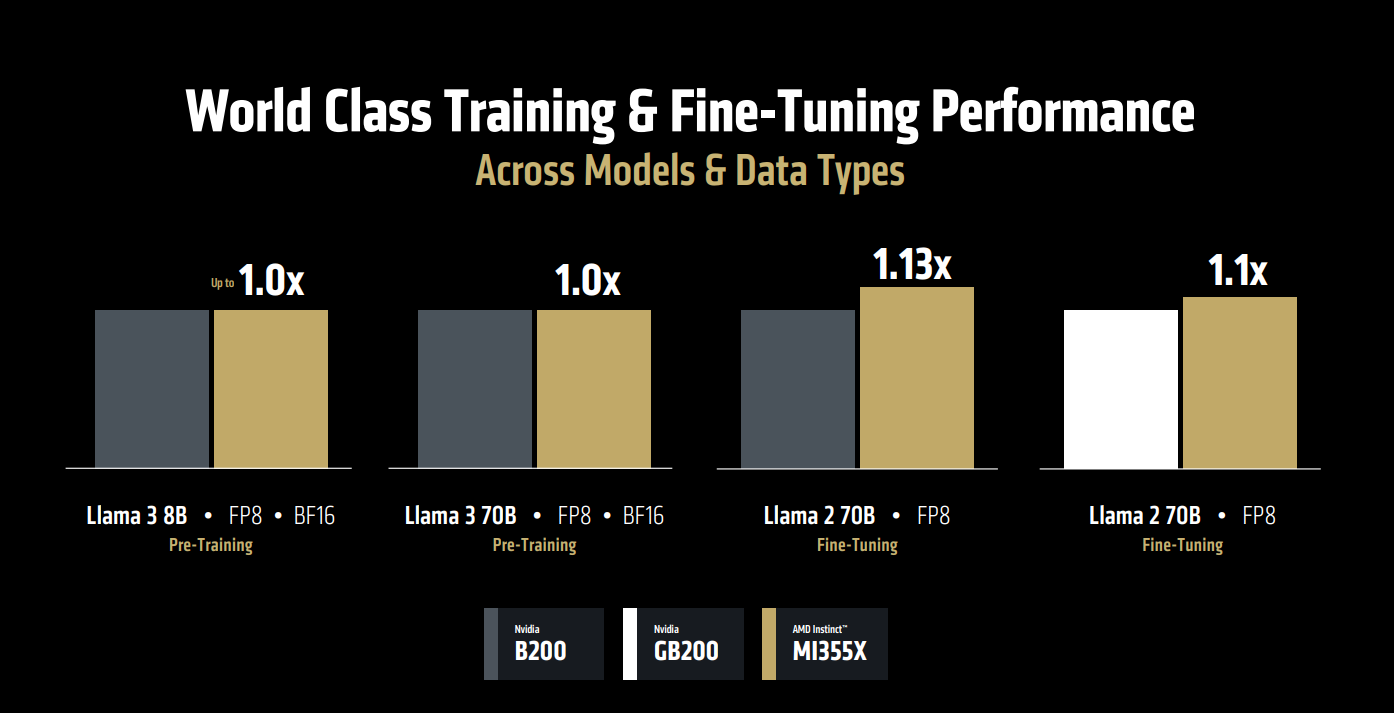
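Cost per token and tokens per dollar are reciprocals, so a percentage quoted for one does not carry over unchanged to the other. The sketch below converts between the two framings using the 40% headline figure. The function names are illustrative, not taken from AMD's material.

```python
def cost_drop_from_tokens_gain(gain: float) -> float:
    """Fractional drop in cost per token for a fractional gain in tokens per dollar."""
    return 1.0 - 1.0 / (1.0 + gain)


def tokens_gain_from_cost_drop(drop: float) -> float:
    """Fractional gain in tokens per dollar for a fractional drop in cost per token."""
    return 1.0 / (1.0 - drop) - 1.0


HEADLINE = 0.40  # the 40% figure quoted above

cost_drop = cost_drop_from_tokens_gain(HEADLINE)
tokens_gain = tokens_gain_from_cost_drop(HEADLINE)

print(f"40% more tokens per dollar -> {cost_drop:.1%} lower cost per token")
print(f"40% lower cost per token   -> {tokens_gain:.1%} more tokens per dollar")
```

Either way the advantage is substantial, but the two readings differ: about 29% lower cost per token in one case, about 67% more tokens per dollar in the other.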

Well, that’s inference, but what about training? Is AMD still far behind in that area?

Not anymore:

So, AMD’s message is clear: Inference will be far bigger than training, and we’re leading that space, but don’t underestimate our position in training either.

With the MI355X at its core, AMD introduced its first full rack system:

Available in both air-cooled and liquid-cooled versions.

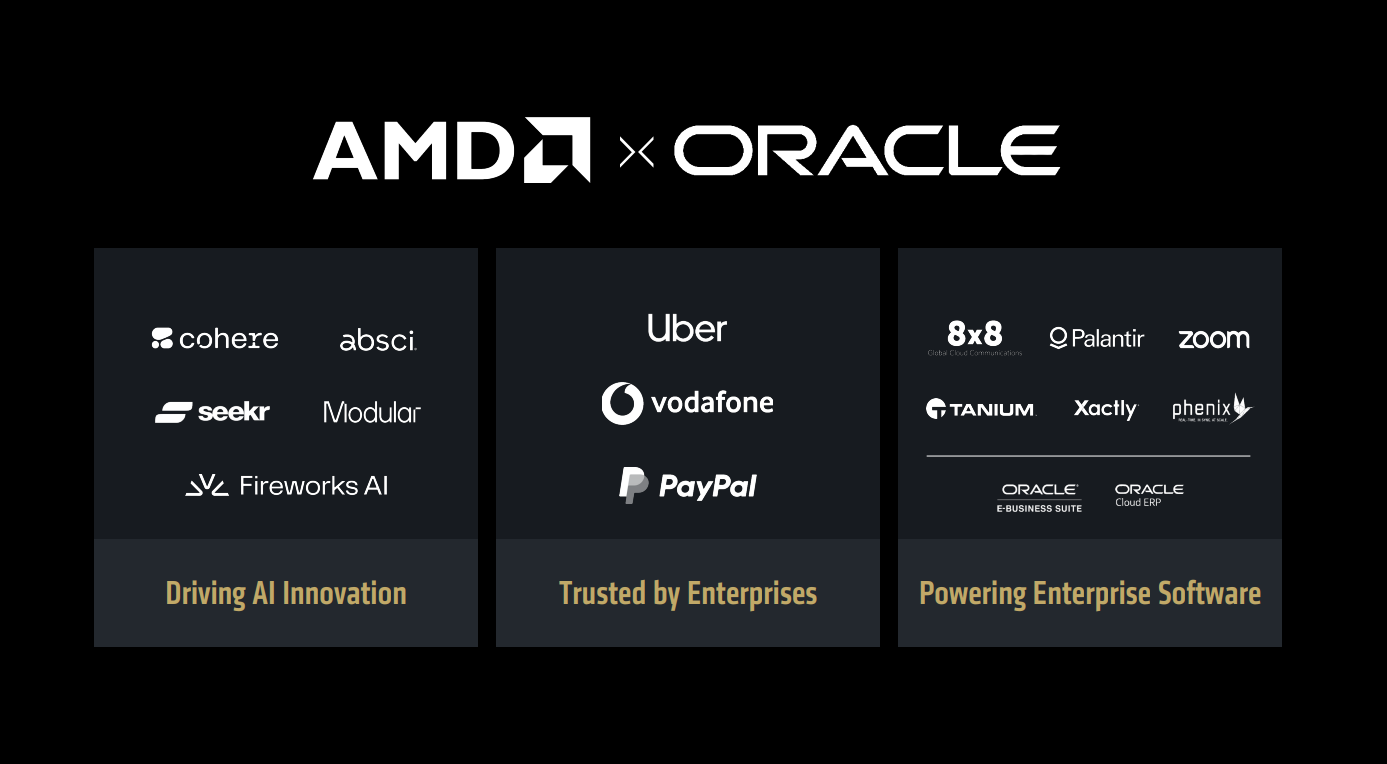

xAI has announced a purchase, and Meta and Microsoft, which has already shown strong interest in the system, are likely to follow. Meanwhile, Oracle has revealed plans to build zettascale clusters using these AMD systems, with over 100,000 GPUs.

The MI355X is a remarkably impressive system, and demand is already strong. In addition to Oracle, Crusoe has placed a $400 million order, and other neocloud providers like TensorWave, Vultr, and Hot Aisle, among others, are also preparing to offer the system.

However, there’s a catch. This system isn’t a true rack-scale architecture on par with NVIDIA’s NVLink-based solutions. It doesn’t enable all GPUs to operate virtually as a single, unified supercomputer, the way NVIDIA’s GB200 NVL72 does.

Instead, it follows a more traditional structure, made up of servers with 8 GPUs each, limiting scalability compared to NVIDIA’s high-end systems.

But this is set to change with the arrival of the MI400 series.

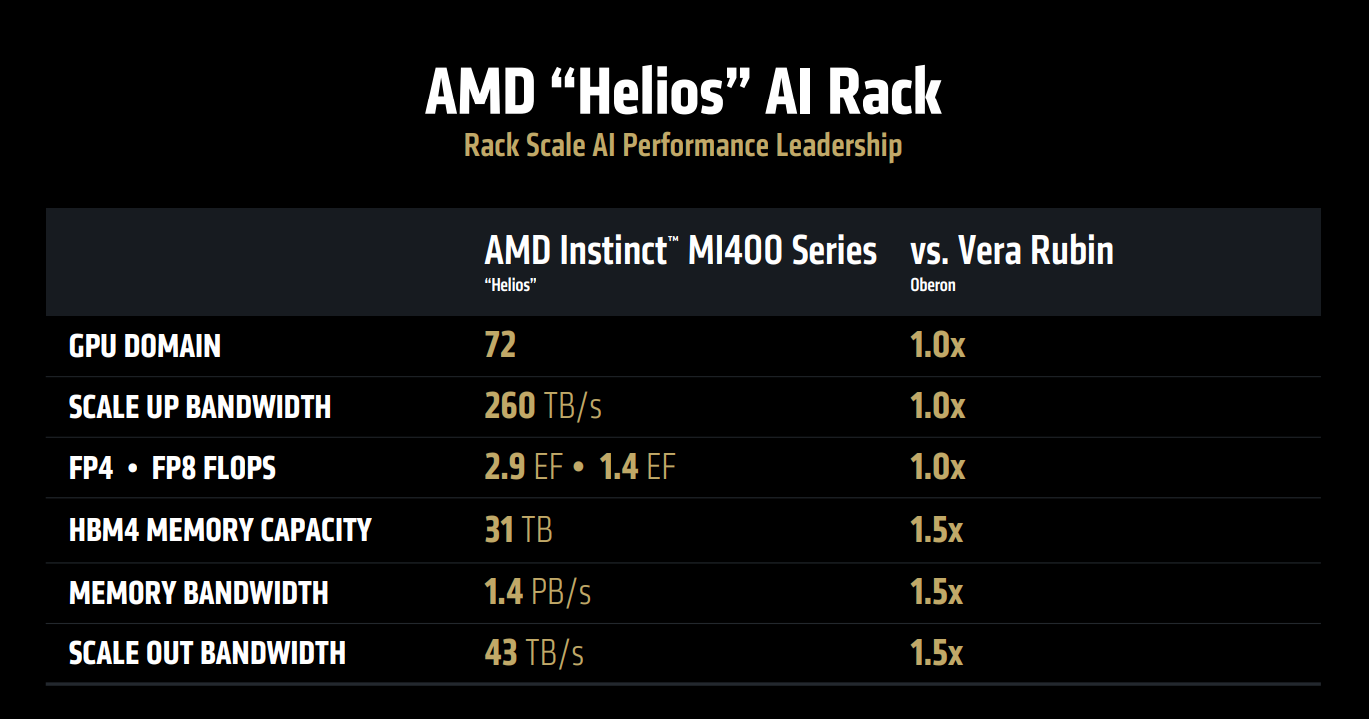

“True” Rack Scale

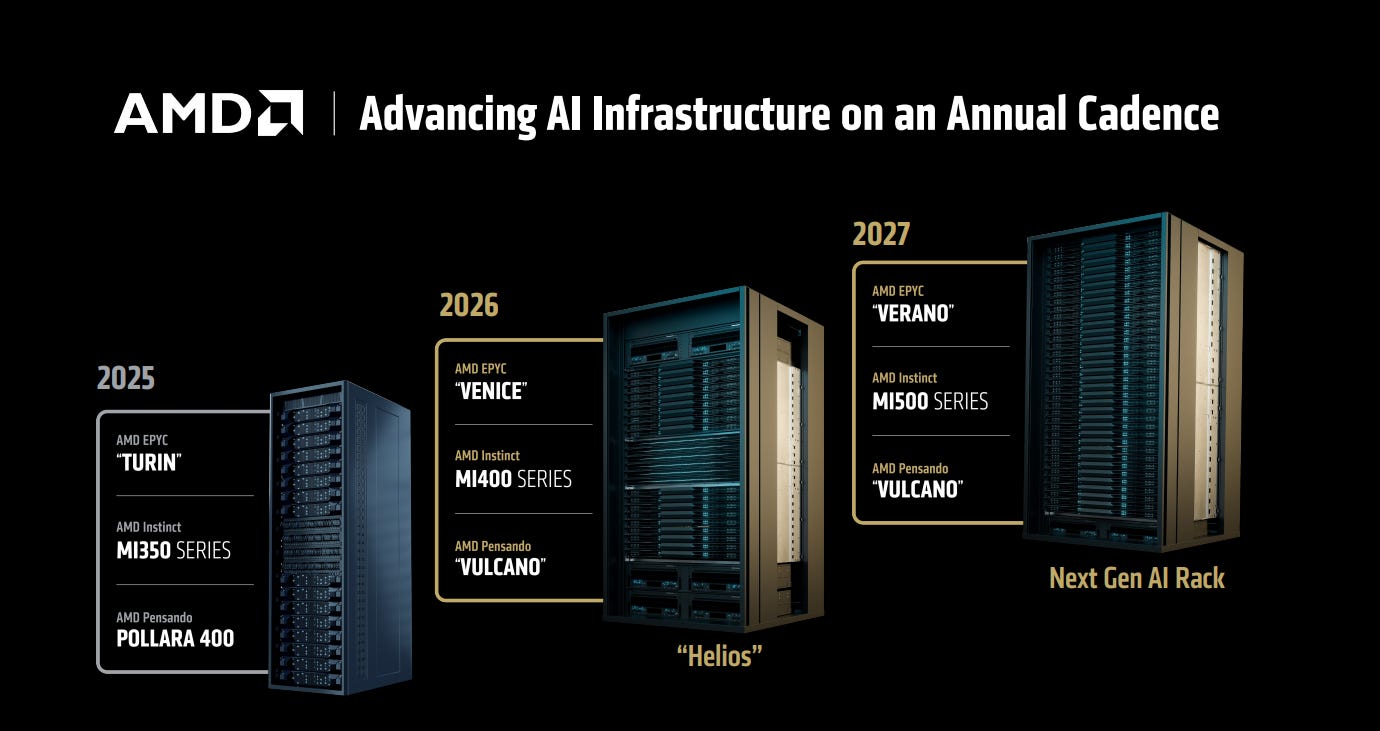

AMD’s first “true” rack-scale solution, codenamed Helios, will feature up to 72 fully interconnected GPUs, powered by the upcoming MI400 series accelerator, a next-gen EPYC processor, and a Pensando NIC.

This system is designed to match the scalability of NVIDIA’s Vera Rubin, and with AMD’s memory bandwidth advantage and tremendous performance benchmarks, especially in inference, it’s not far-fetched to say that what could be ending isn’t just NVIDIA’s monopoly in large-scale systems, but potentially its leadership position as well.

The compute performance that AMD is guiding to speaks for itself:

The interest in a rack-scale system capable of surpassing NVIDIA’s is so strong that Sam Altman took the stage to announce that OpenAI is collaborating with AMD on the development of the MI400 series.

Having OpenAI as a major customer for the MI400 would be a huge milestone for AMD, and if they deliver, it’s not far-fetched to say they’ll need to reserve a spot among the trillion-dollar companies by market cap. Because OpenAI won’t be the only one interested.

AMD is taking this step by step. First, it focused on building the best chips. Then came the server and rack design. Now, it's aiming for the ultimate goal: delivering the best solutions at every scale, from large-scale data centers and enterprise environments to laptops and desktop PCs.

The final announcement in this section was the most impressive and important of all.

AMD revealed its upcoming MI500 Series accelerator, which will debut with a next-generation rack system featuring 256 interconnected GPUs, surpassing NVIDIA’s Rubin Ultra, expected around the same time in 2027, which is designed for 144 GPUs.

Outscaling NVIDIA by such a margin would be unprecedented. Now it’s a matter of execution and time, but one thing is clear: the future looks very exciting for AMD.

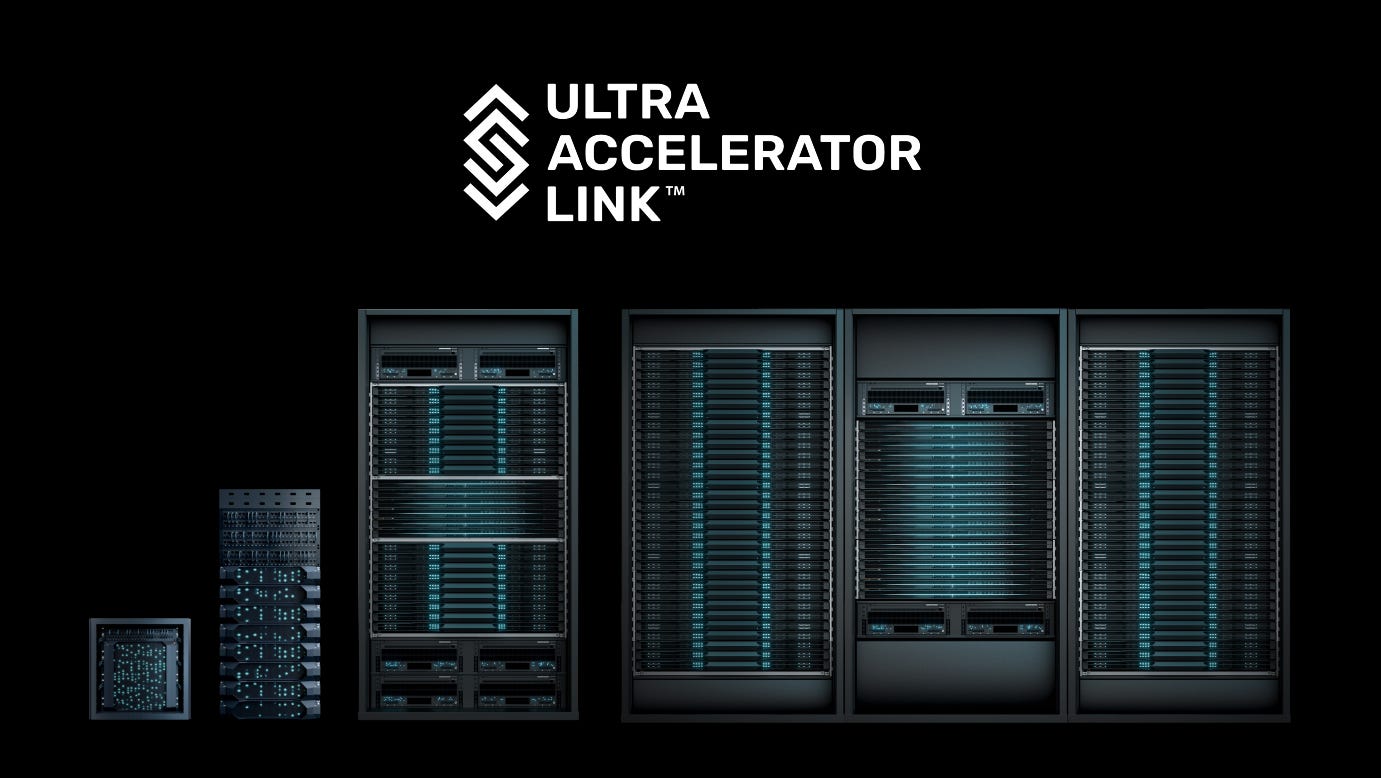

UALink

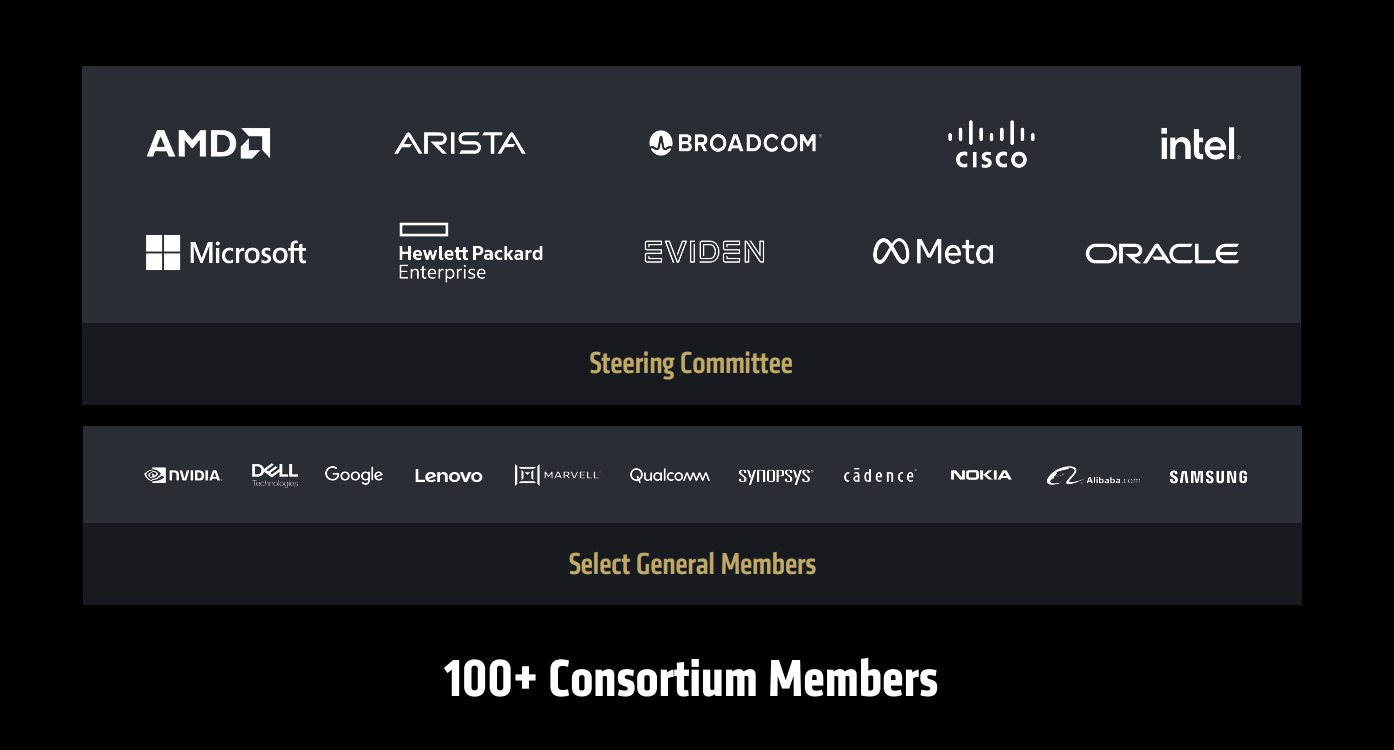

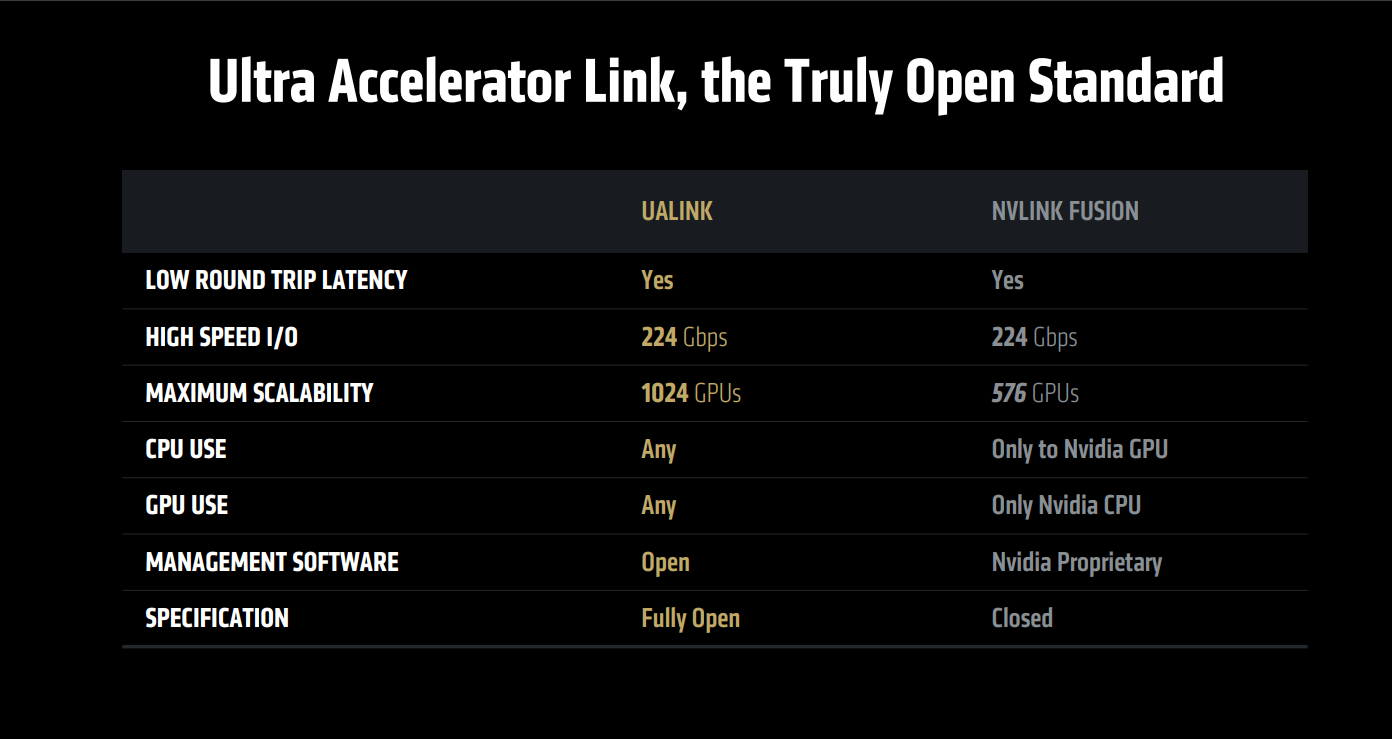

And how will this level of scalability be achieved? By leveraging the UALink consortium.

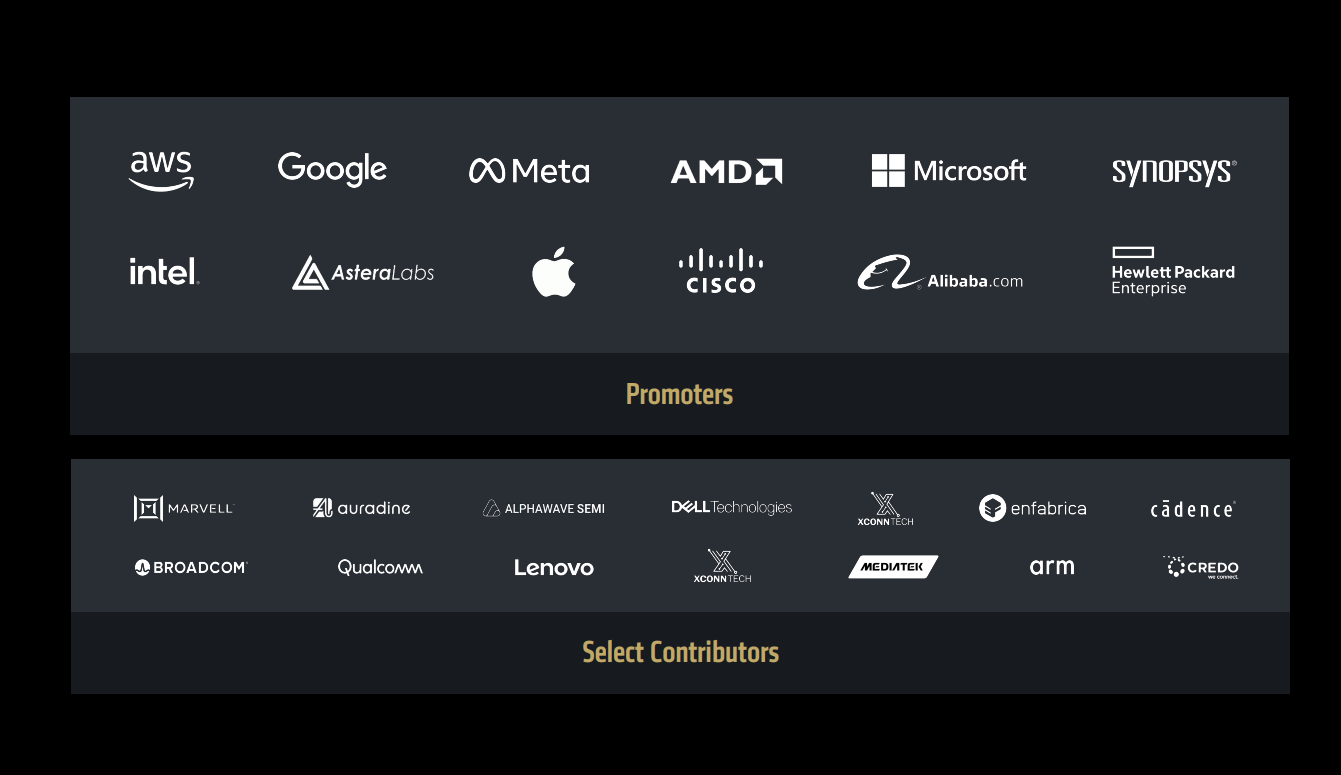

The UALink consortium brings together some of the world’s largest tech companies, united by a common goal: establishing a universal standard for AI systems connectivity.

As I explained in my AMD deep dive, companies are tired of depending on NVIDIA. They’re tired of sustaining NVIDIA’s massive profit margins, simply because of its monopoly on interconnect technology, NVLink. And the reality is simple: as big and capable as NVIDIA is, it can’t compete against the combined force of the world’s largest tech companies.

UALink has already released its first industry specification, and early testing shows very promising performance:

In the long term, NVIDIA won’t be able to compete with this. The industry is clearly moving toward an open standard, and as that shift takes hold, NVIDIA will lose its edge in this area. This will unlock the door for more companies to deploy large-scale data center GPU infrastructure, with AMD positioned to benefit the most.

Software Ecosystem

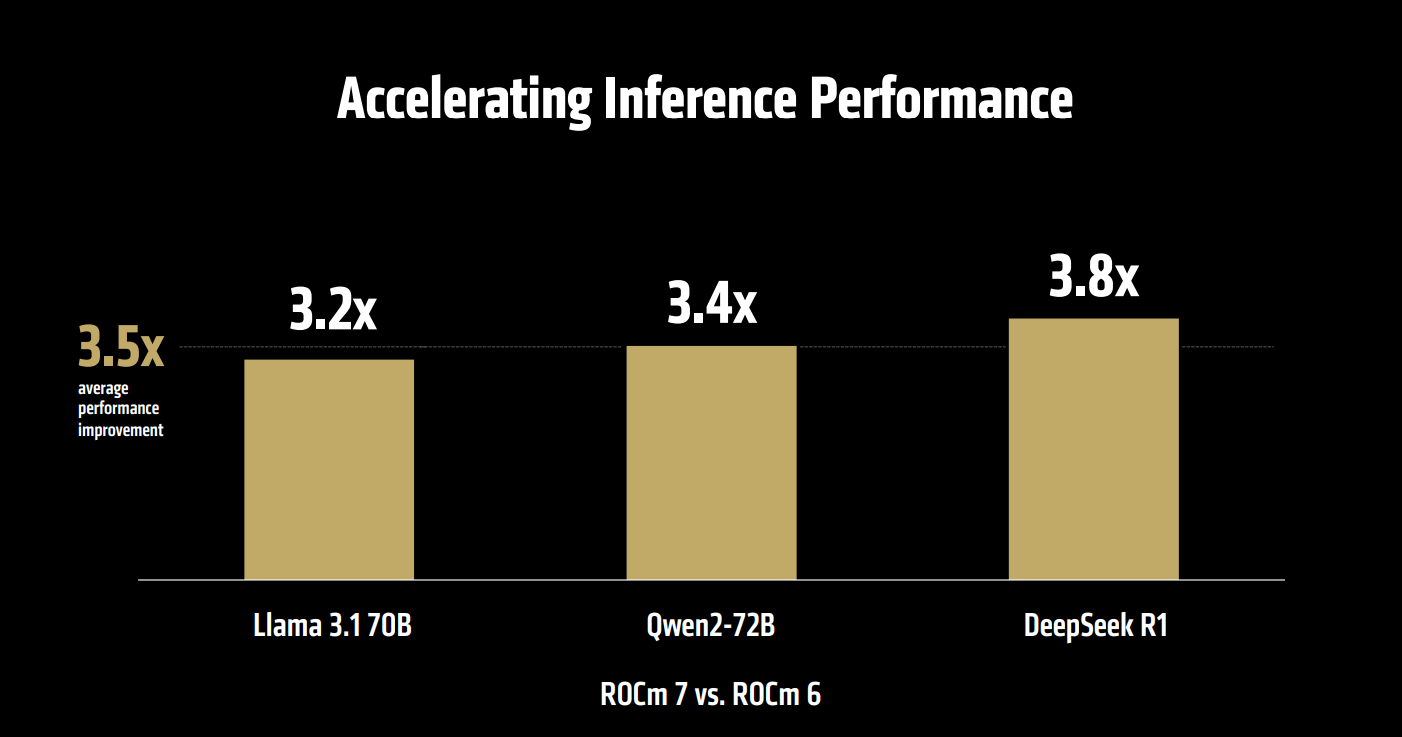

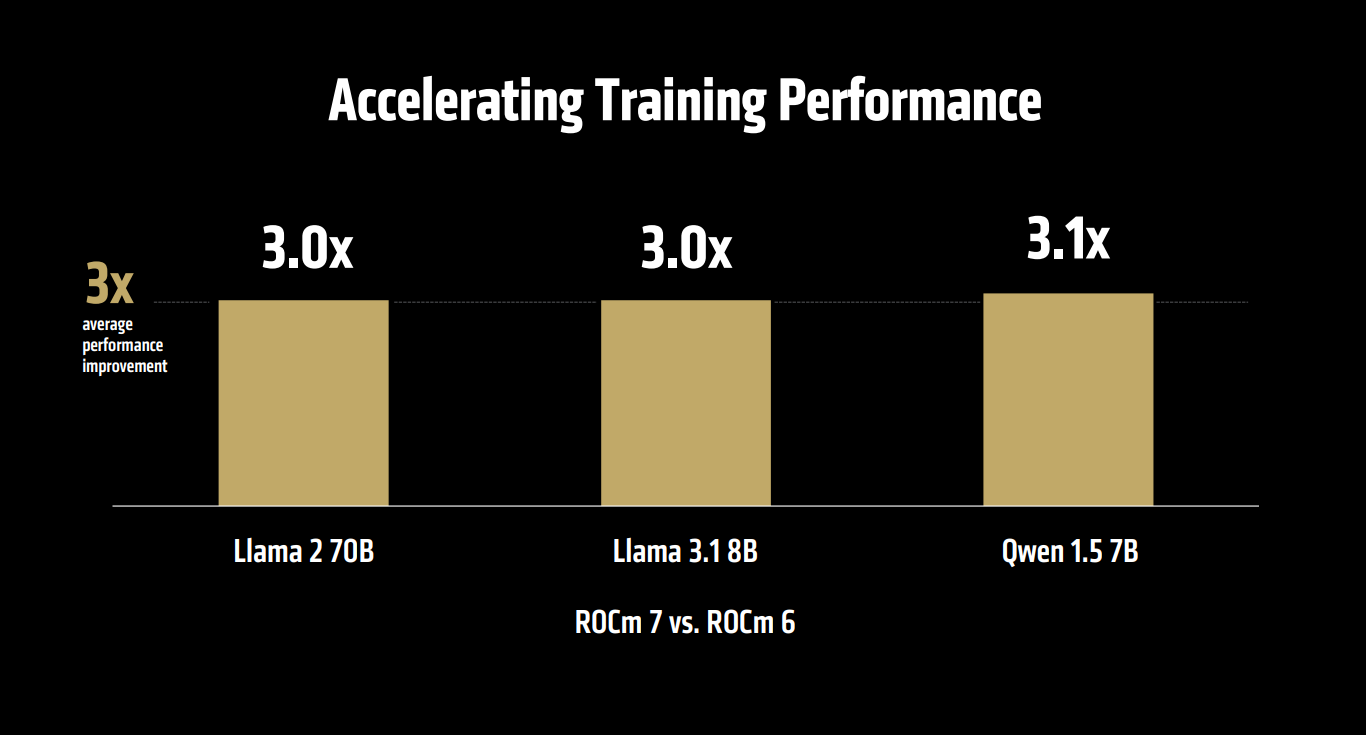

AMD also dedicated a significant section to software advancements. They introduced ROCm 7, delivering a major leap in performance compared to the previous generation:

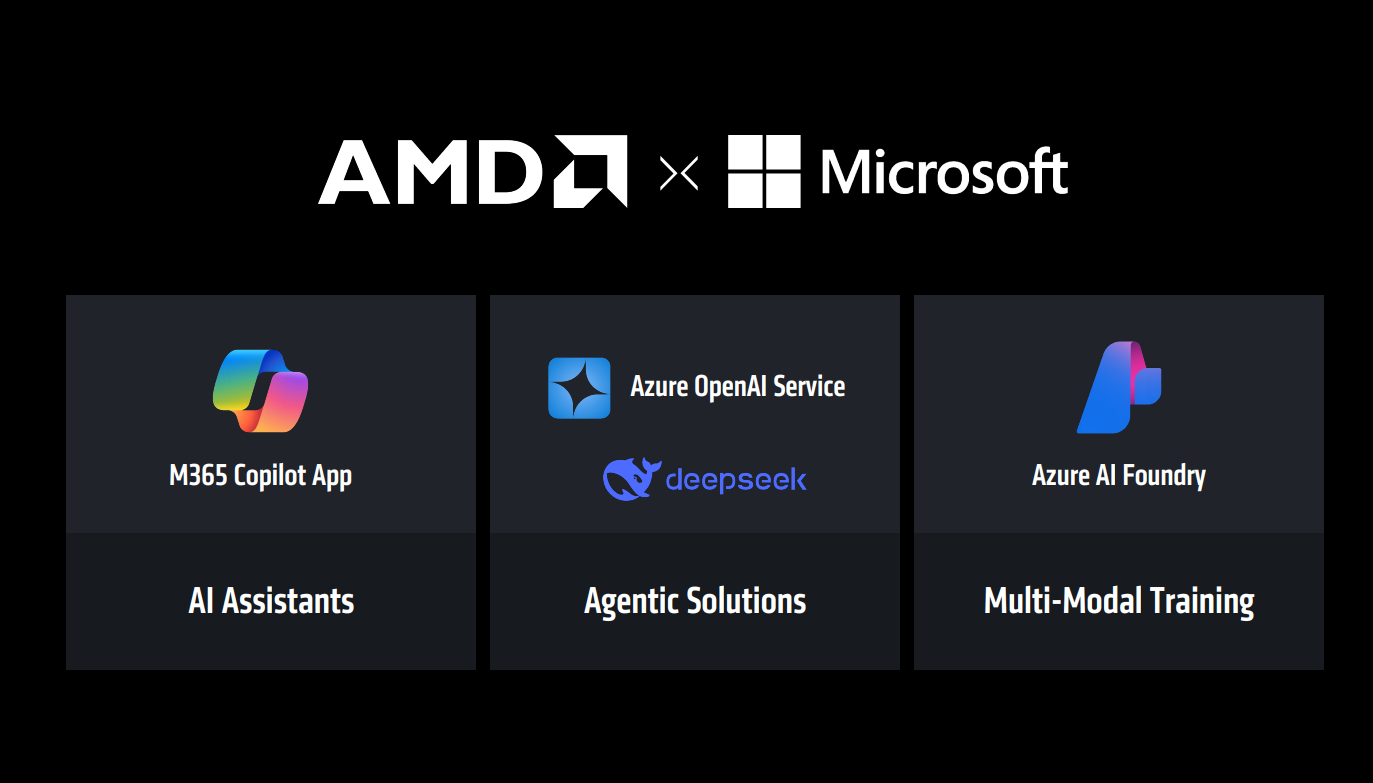

AMD is also collaborating with Microsoft, which is not just a client but a strategic partner, working with AMD to enhance its open-source software stack and unlock maximum value in inference scenarios.

The improvements aren’t limited to hardware. ROCm 7 is delivering a significant performance boost:

ROCm isn’t on the same level as CUDA yet, but it’s improving rapidly, precisely because it’s open source. Catching up is always easier than inventing from scratch, and the fact that NVIDIA spent 20 years building CUDA doesn’t mean ROCm will take just as long. In many cases, ROCm is already perfectly usable and delivers strong performance, and at this pace, it will soon be seen as an advantage rather than a weakness.

The integration of Triton, OpenAI’s open-source GPU programming language and compiler, is another major boost for AMD. Triton simplifies the developer experience by letting developers write GPU kernels in Python, abstracting much of the complexity of CUDA/C++ programming.

In the mid to long term, Triton will also help unify the software stack across vendors, making it easier to migrate between hardware platforms, weakening CUDA’s moat and reducing vendor lock-in.

AMD has been actively pushing for broader adoption of its chips among developers. If developers don’t learn to use the ROCm stack early on, they’re unlikely to choose it at any point in their careers. That’s why increasing adoption and expanding the open-source, collaborative ecosystem is essential.

To support this effort, AMD announced the AMD Developer Cloud, a platform designed to give developers hands-on access to AMD hardware and tools.

Here’s a brief explanation from Anush Elangovan, AMD’s AI Lead:

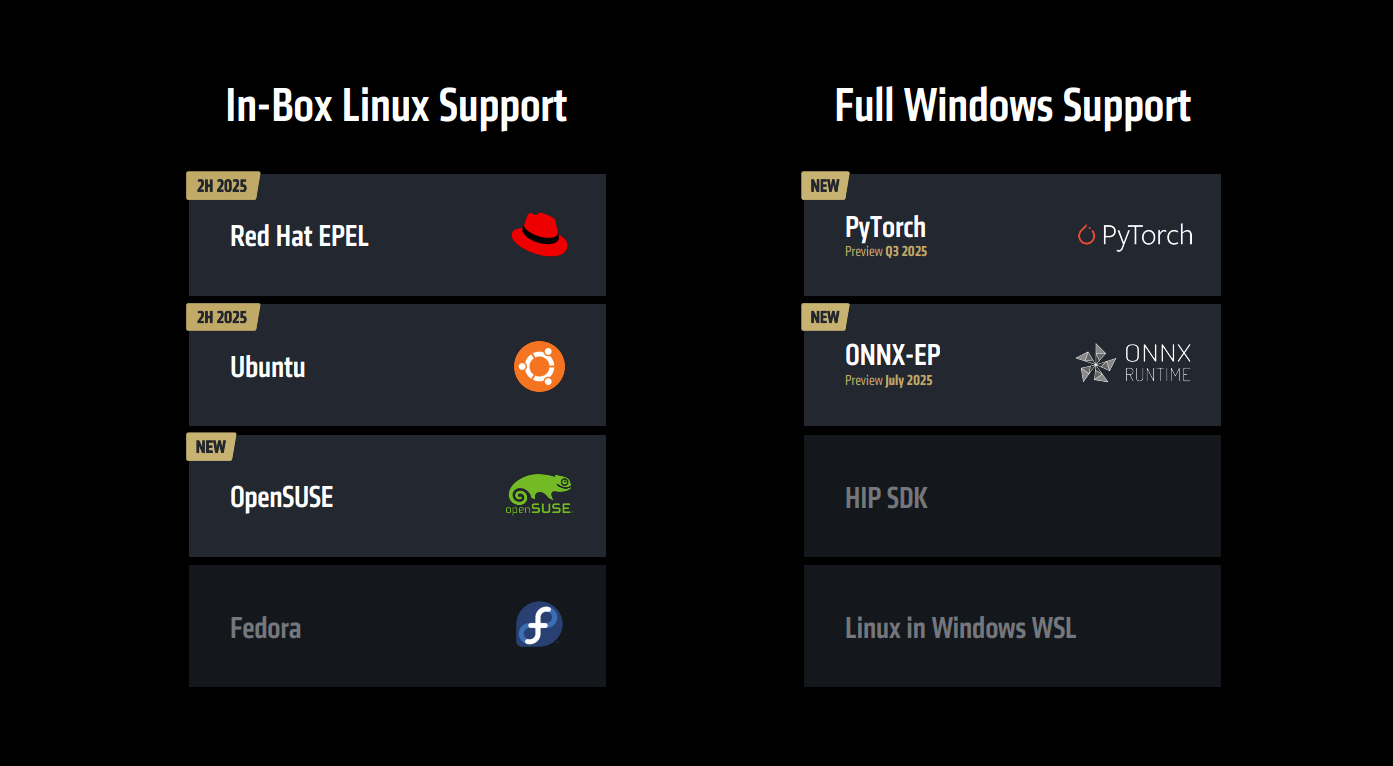

AMD is also expanding ROCm support to its client AI systems, a move that will further accelerate the adoption and popularization of its software stack among developers and enterprises alike.

Considering how powerful AMD’s client AI systems are, the lack of ROCm support was a clear waste of potential.

Now, that’s about to change.

Conclusion

This is a summary of the key highlights from AMD’s Advancing AI event:

The MI350 series is set to drive 2025 earnings growth. ROCm 7 and the launch of the AMD Developer Cloud will strengthen AMD’s position in the open-source AI ecosystem, while the Helios rack, arriving in 2026, gives AMD a real shot at claiming the top spot in the AI accelerator race.

A promising event, filled with impressive announcements, and a clear signal that AMD is accelerating its roadmap to push its share price back to all-time highs and beyond.